Cybersecurity Training / Awareness (Updated)

📅 January 2024

"In the electrifying realm of technology, networking acts as the intricate web stitching our digital world together, where information flows like a vibrant stream connecting devices and minds."

Explore the curated list of free cyber security certifications below:

- ✅ 1. Introduction to Cybersecurity
- ✅ 2. Cybersecurity Essentials
- ✅ 3. Networking Essentials
- ✅ 4. Intro to Information Security by Udacity
- ✅ 5. Network Security by Udacity
- ✅ 6. Fortinet FCF, FCA ✨✨
- ✅ 7. Information Security by OpenLearn
- ✅ 8. Network Security by OpenLearn
- ✅ 9. Risk Management by OpenLearn
- ✅ 10. Certified in Cybersecurity℠ (CC)
- ✅ 11. CCNA Security Courses
- ✅ 12. Network Defense Essentials (NDE)
- ✅ 13. Ethical Hacking Essentials (EHE)
- ✅ 14. Digital Forensics Essentials (DFE)
- ✅ 15. Dark Web, Anonymity, and Cryptocurrency
- ✅ 16. Digital Forensics by OpenLearn
- ✅ 17. AWS Cloud Certifications (Cybersecurity)
- ✅ 18. Microsoft Learn for Azure
- ✅ 19. Google Cloud Training
- ✅ 20. Android Bug Bounty Hunting: Hunt Like a Rat
- ✅ 21. Vulnerability Management
- ✅ 22. Software Security
- ✅ 23. Developing Secure Software
- ✅ 24. PortSwigger Web Hacking
- ✅ 25. Red Teaming
- ✅ 26. Splunk
- ✅ 27. Secure Software Development
- ✅ 28. Maryland Software Security
- ✅ 29. Stanford Cyber Resiliency
- ✅ 30. Cyber Threat Intelligence ✨
- ✅ 31. ITProTV: free IT exam preparation
- ✅ 32. 15 Free CISA Courses

☀️☀️☀️☀️☀️☀️☀️☀️

☀️ Penetration testing and ethical hacking

- ✅ arcx
- ✅ Training
- ✅ ITMasters
- ✅ Penetration Testing
- ✅ TRAINING COURSES
- ✅ Introduction to Internet of Things
- ✅ CYBER TRAINING COURSES
- ✅ TRAINING COURSES
- ✅ FREE Cybersecurity Education Courses
- ✅ Junior Incident Response Analyst
- ✅ CYBER THREAT INTELLIGENCE 101
- ✅ Penetration Testing
- ✅ TCM SECURITY - Intro to Information Security
- ✅ GT - Network Security
- ✅ Ethical Hacking Essentials (EHE)
- ✅ Responsible Red Teaming
- ✅ Developing Secure Software (LFD121)
- ✅ EC-Council MOON Certifications
- ✅ CCNA SHEET SUMMARY PDF
- ✅ HR Fundamentals And Best Practices

# GOOGLE GROUP LISBON

📅 Google event Lx, November 10, 2017

" The best core knowledge which can’t be taught, comes from working on something of your own and share a network of collaborative ideas -->

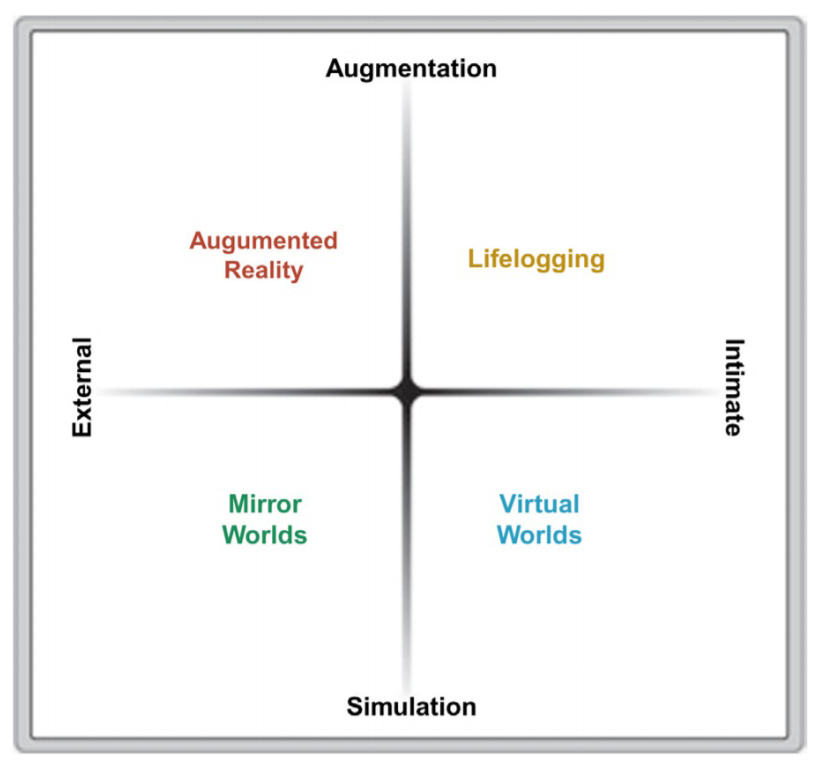

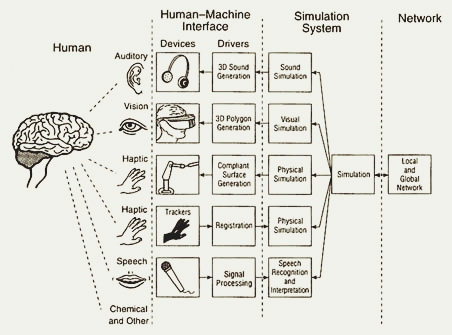

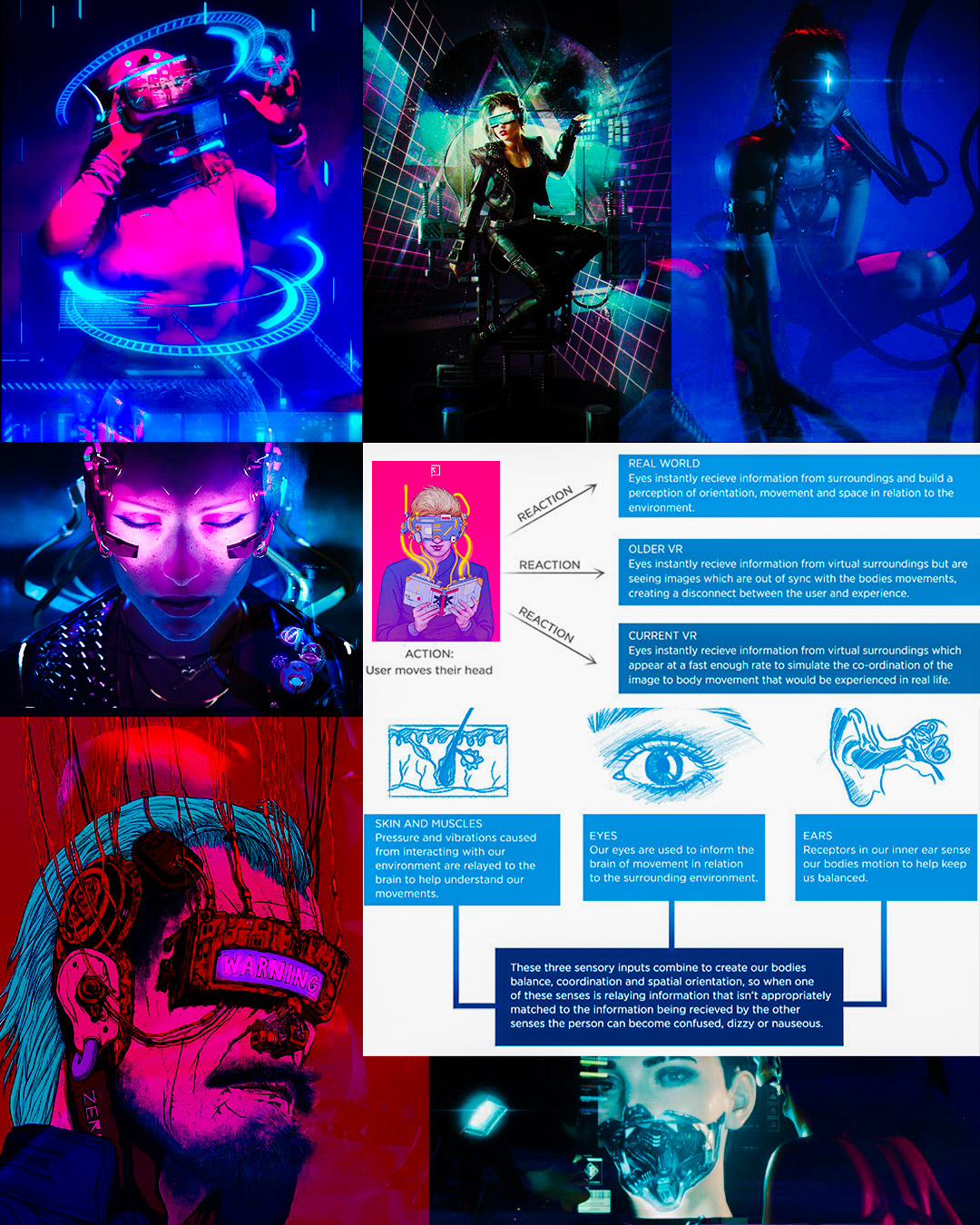

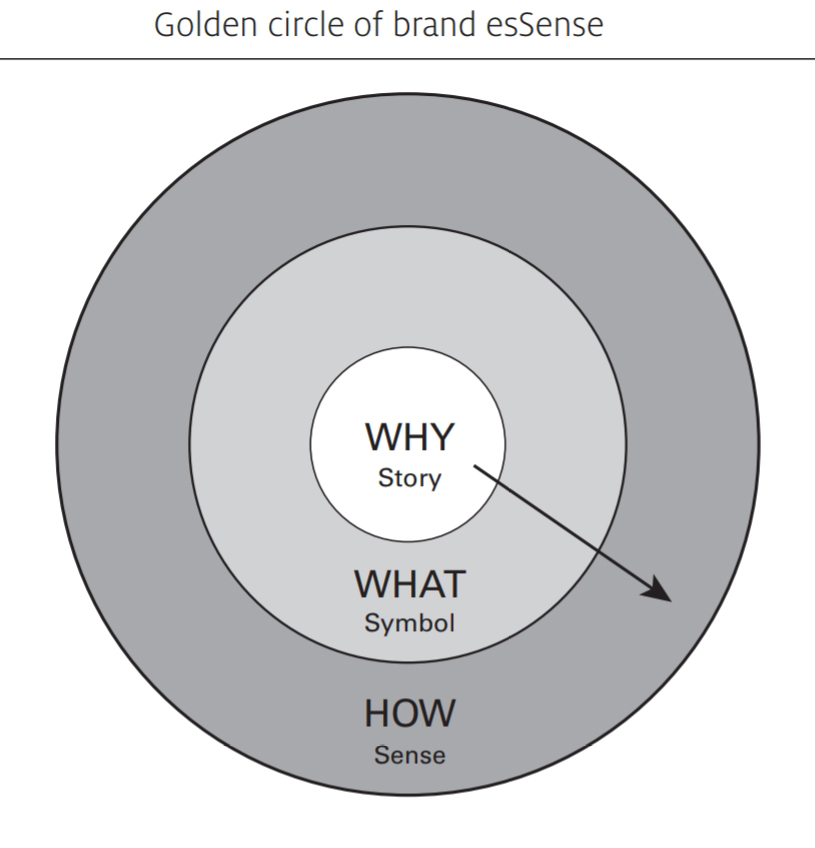

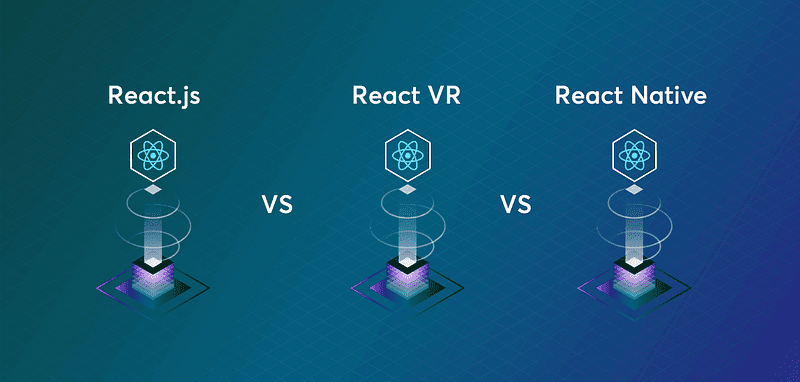

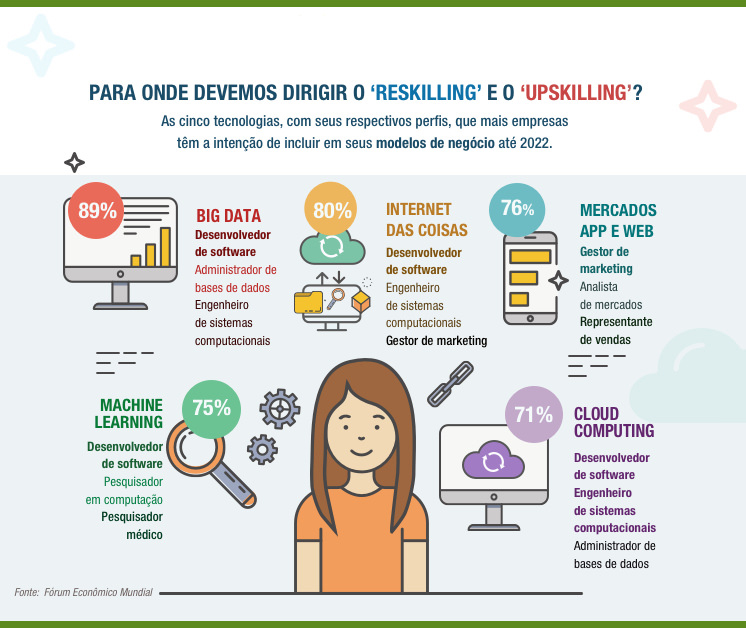

a side project from your main job to fuel your passion while learning something new. " I proactively attend events to enhance high-tech soft skills , while collaborating with like-minded individuals towards community-oriented goals. Google Group Lx - a 3 days event - focused on understanding the latest technologies and industry trends - fostering innovation and growth opportunities among participants. I recognize the importance of keeping up-to-date with industry news and continuously learning to code, regardless of the profession. Despite very few “new" technologies are a rehashed old idea, You'll want a new challenge, something that could push you well out of the comfort zone. Therefore, training initiatives should emphasize the big picture rather than individual skills that have limited applicability. The Google Lisbon event covered a wide range of topics that impact technological development. Discussions included the role of ethics, security, resources, and budget in shaping development through Spatial Computing -

- evolving VR content and 360° video ads;
- iris and fingerprint sensor technology, and voice-recognition SDK techniques;
- dynamic displays: computer vision, creative studio tools, and automated vehicles;
- artificial intelligence (AI), machine learning (ML), business intelligence (BI), data science and big data, and deep-learning hardware (NVIDIA GPUs) and software.

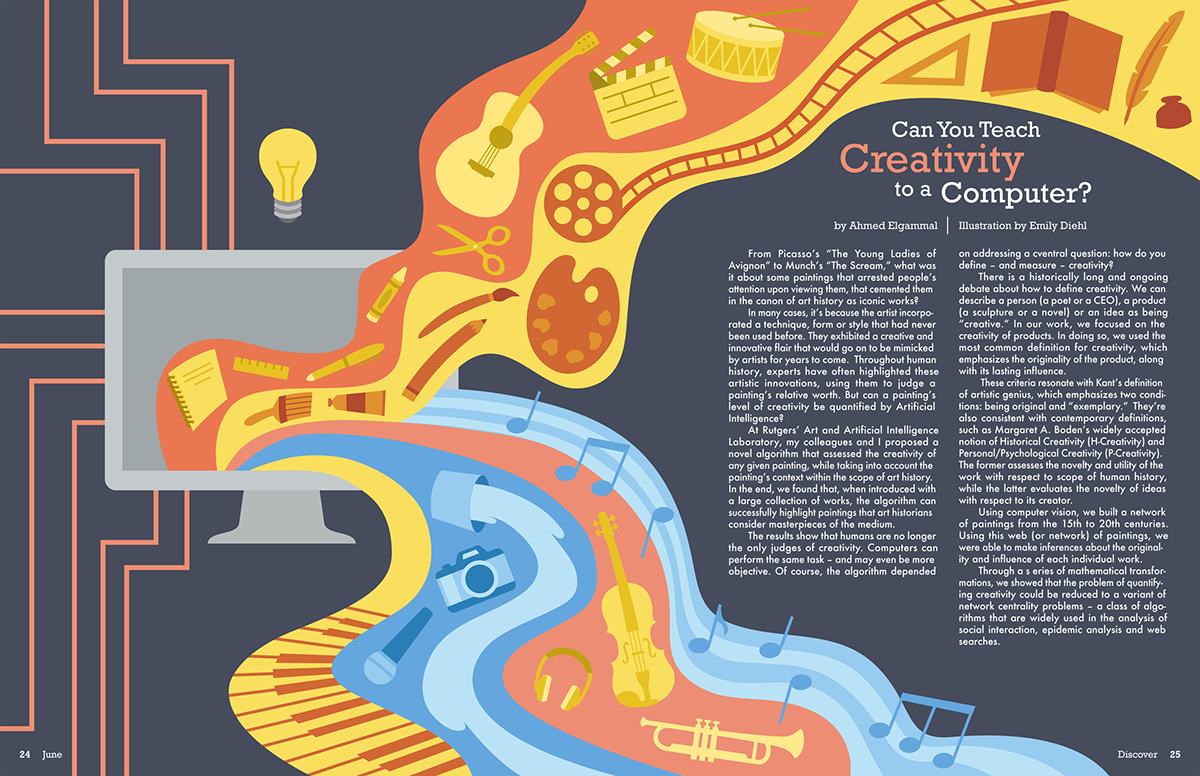

Additionally, the talks delved into AI-generated art: using deep neural networks, including generative adversarial networks (GANs), to learn from existing artwork and to recreate or blend its styles (e.g., Botto, Ai-Da, Obvious).

Other topics included consumer and commercial IoT versus industrial IoT.

There were also sessions on Google Ads and commerce strategies for ranking websites, search optimization, and bringing in traffic.

Besides breakthrough advances in science and technology, such as combining big-data analysis with machine-learning methods, automated cameras, or sensors, the discussions also focused on the future of digital security.

Topics included biometric identification, the potential risks of facial-recognition technology, and the need for stronger data-protection laws.

Such measures not only enhance cybersecurity but also safeguard users' digital identities.

At some point, everyone needs to accept that all technology carries security risks.

It is difficult to decide which option is more feasible and secure, because more security often means less privacy ("+" security = "-" privacy).

The talks also touched on cybernetics and network infrastructure, which serve as the backbone of all wired and wireless communication, and on the path from computer code to genetic code: bioengineering, nanotechnology, IoT architectures, machine intelligence, synthetic biology, and transhumanist concepts, as well as breakthroughs in DNA data storage that flip bits into molecules.

From the tactile mirror to the virtual body: mapping territories from packages to people through media forensics, and reforming privacy and security surveillance around trust, integrity, reliability and resiliency, authenticity, and compliance.
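The "bits into molecules" idea behind DNA data storage can be illustrated with a toy encoder that maps every two bits to one of the four nucleotide bases. This is a minimal Python sketch assuming a fixed, hypothetical mapping (00→A, 01→C, 10→G, 11→T); practical schemes add error correction and avoid long runs of the same base, which this example does not.

```python
# Hypothetical fixed mapping: two bits per nucleotide base.
BITS_TO_BASE = {"00": "A", "01": "C", "10": "G", "11": "T"}
BASE_TO_BITS = {base: bits for bits, base in BITS_TO_BASE.items()}


def encode(data: bytes) -> str:
    """Turn bytes into a DNA base string, four bases per byte."""
    bits = "".join(f"{byte:08b}" for byte in data)
    return "".join(BITS_TO_BASE[bits[i:i + 2]] for i in range(0, len(bits), 2))


def decode(strand: str) -> bytes:
    """Reverse the mapping: four bases -> eight bits -> one byte."""
    bits = "".join(BASE_TO_BITS[base] for base in strand)
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))


strand = encode(b"Hi")
print(strand)          # CAGACGGC
print(decode(strand))  # b'Hi'
```

At two bits per base, one byte always needs four bases, so the round trip is lossless as long as no base is misread.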

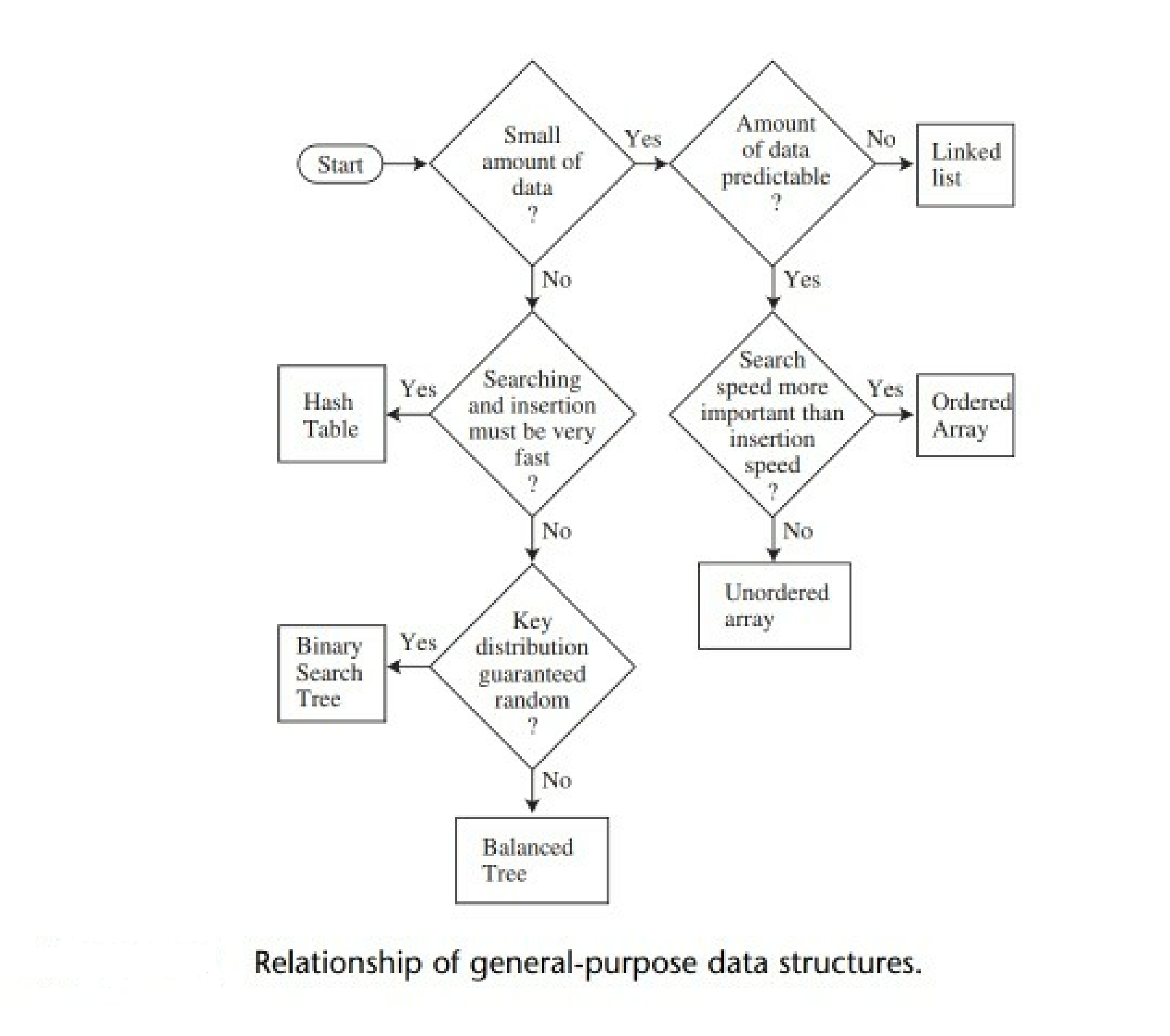

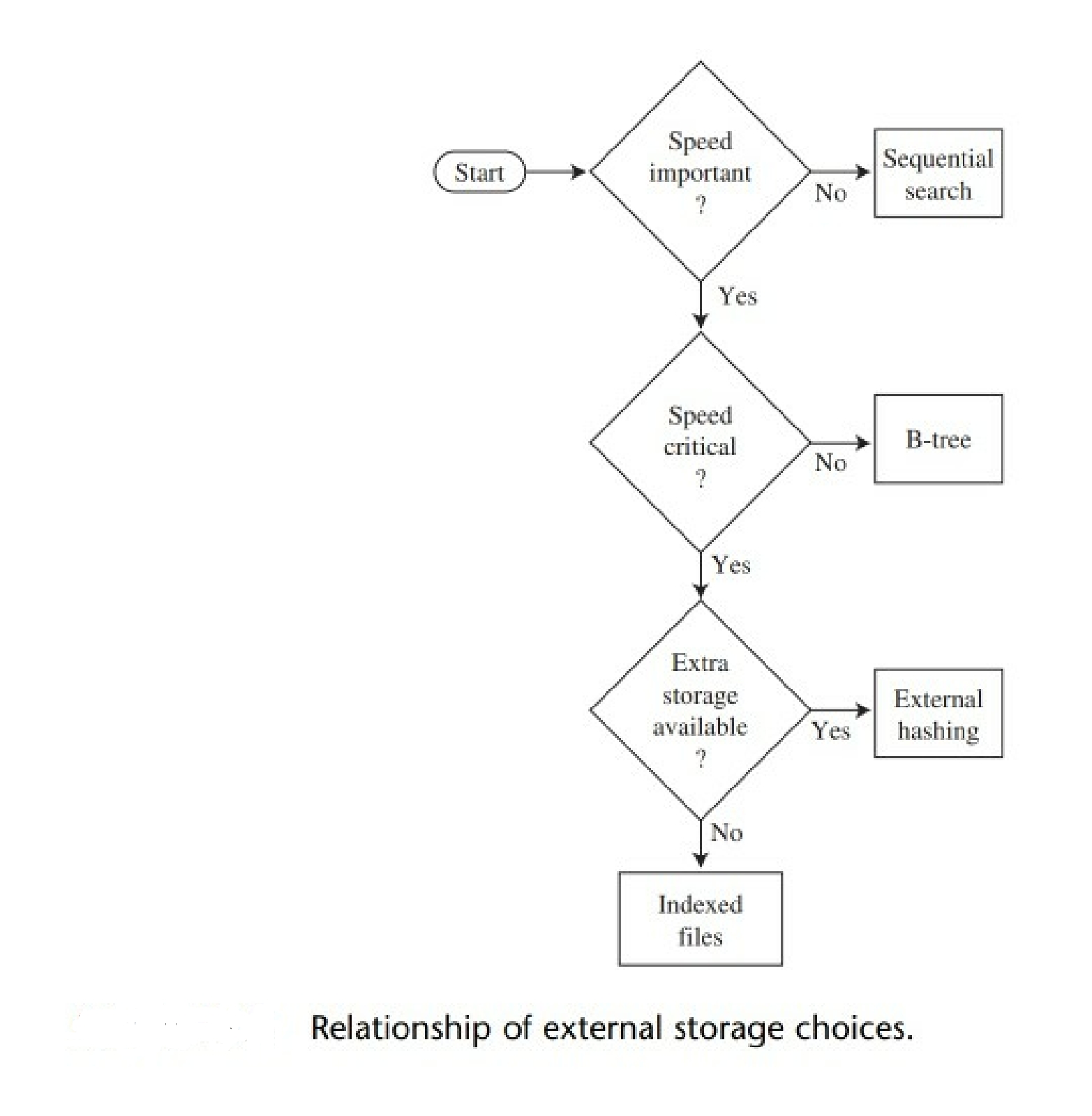

Finally, the event addressed integrating business, IT, virtual-infrastructure software, cloud strategies, and data structures and algorithms.

The talks emphasized implementing strong encryption and authentication protocols to protect data and ensure that only authorized personnel can access it.
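As an illustration of the authentication side, here is a minimal Python sketch of message authentication with HMAC-SHA256 from the standard library. The randomly generated key stands in for a shared secret that would, by assumption, come from a key-management system, and the message text is hypothetical. HMAC detects tampering and proves the sender knew the key, but it does not hide the message; confidentiality requires encryption, for example an authenticated mode such as AES-GCM, which Python's standard library does not provide.

```python
import hashlib
import hmac
import secrets

# Hypothetical shared secret; in practice it would come from a key-management system.
key = secrets.token_bytes(32)


def sign(message: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag over the message."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(message: bytes, tag: bytes) -> bool:
    """Recompute the tag and compare in constant time to avoid timing leaks."""
    return hmac.compare_digest(sign(message), tag)


msg = b"transfer 100 EUR to account 42"
tag = sign(msg)
print(verify(msg, tag))                                # True
print(verify(b"transfer 900 EUR to account 42", tag))  # False
```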


"In vain have you acquired knowledge if you have not imparted it to others." (Deuteronomy Rabbah)

# Insights Update, August 2023

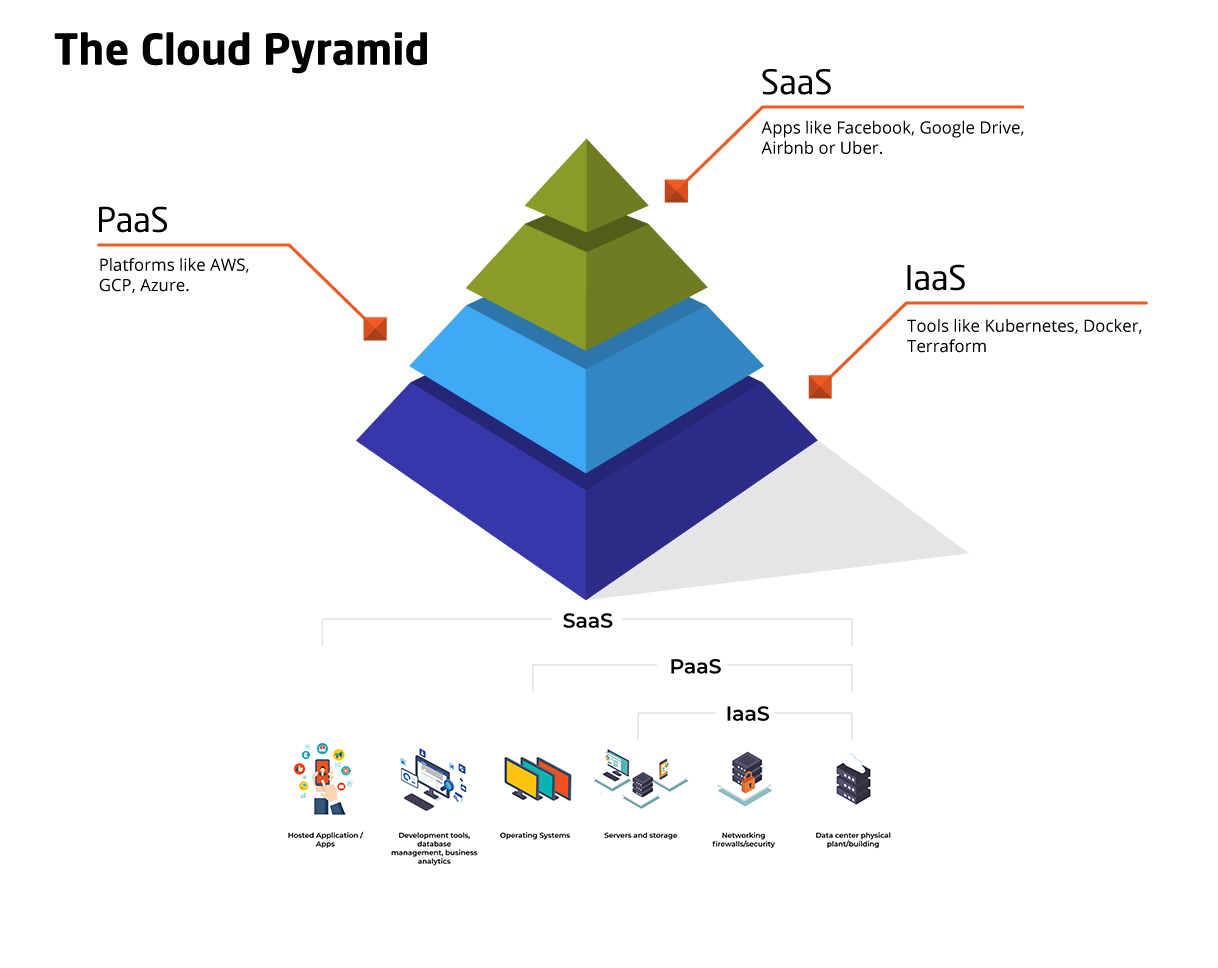

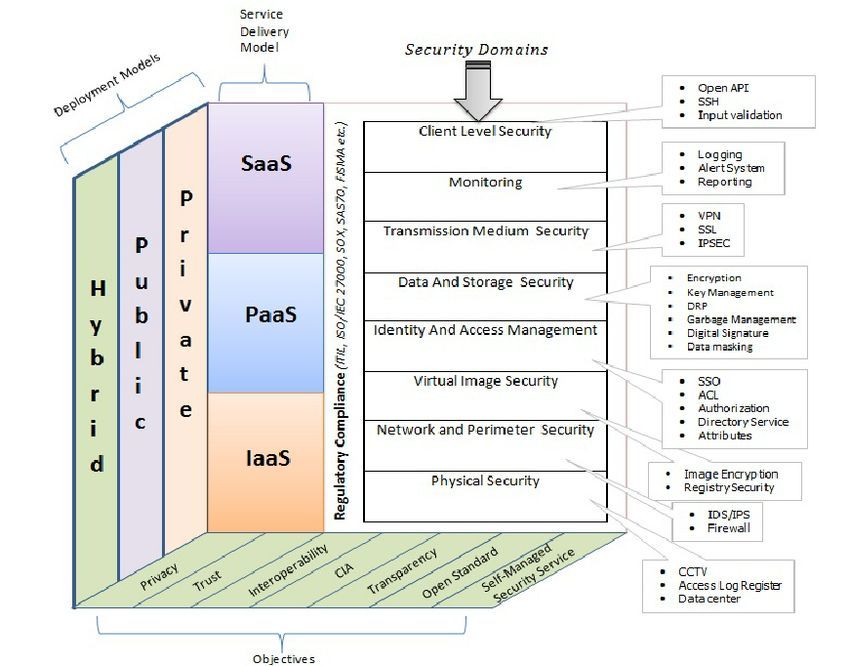

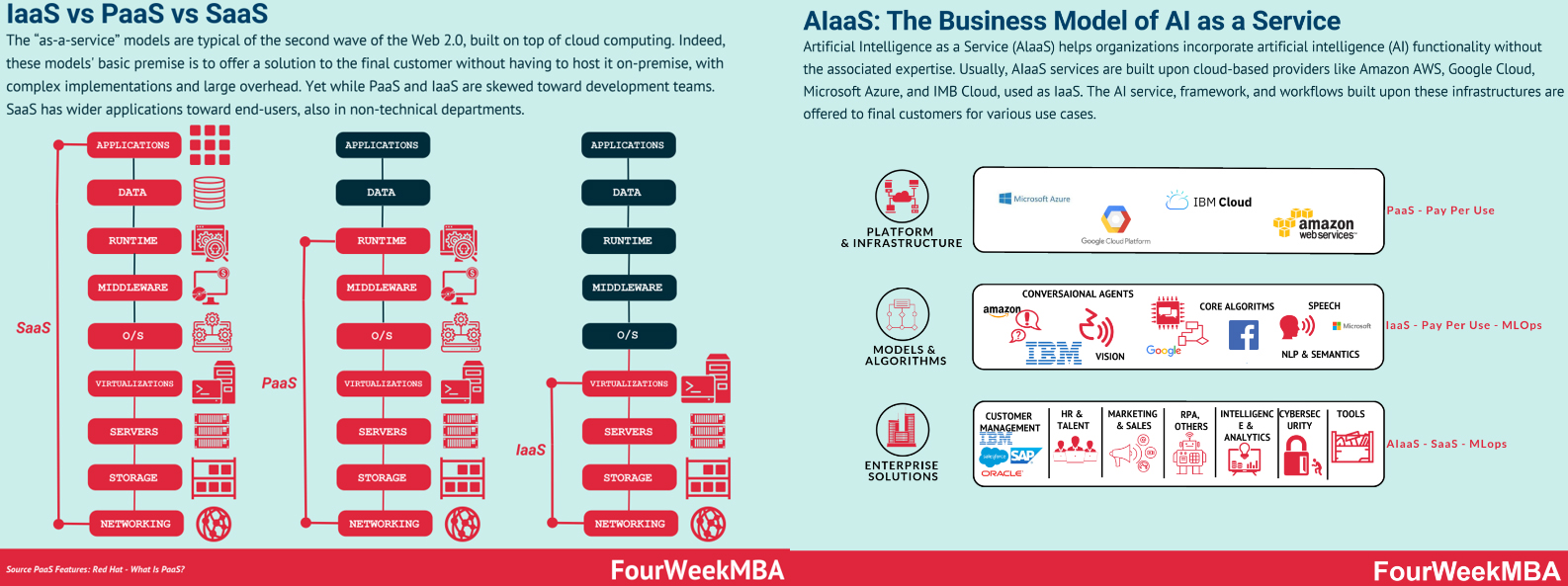

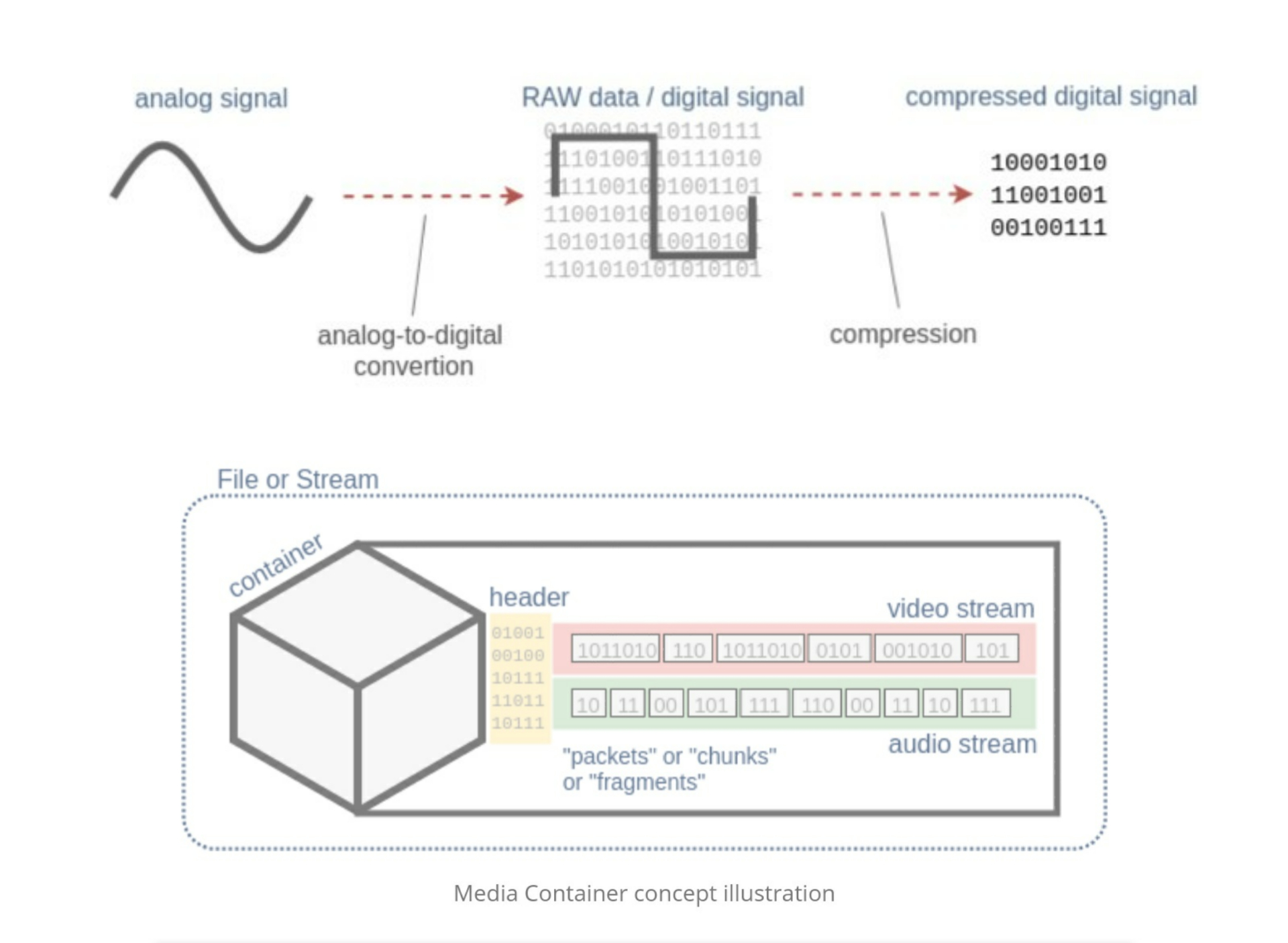

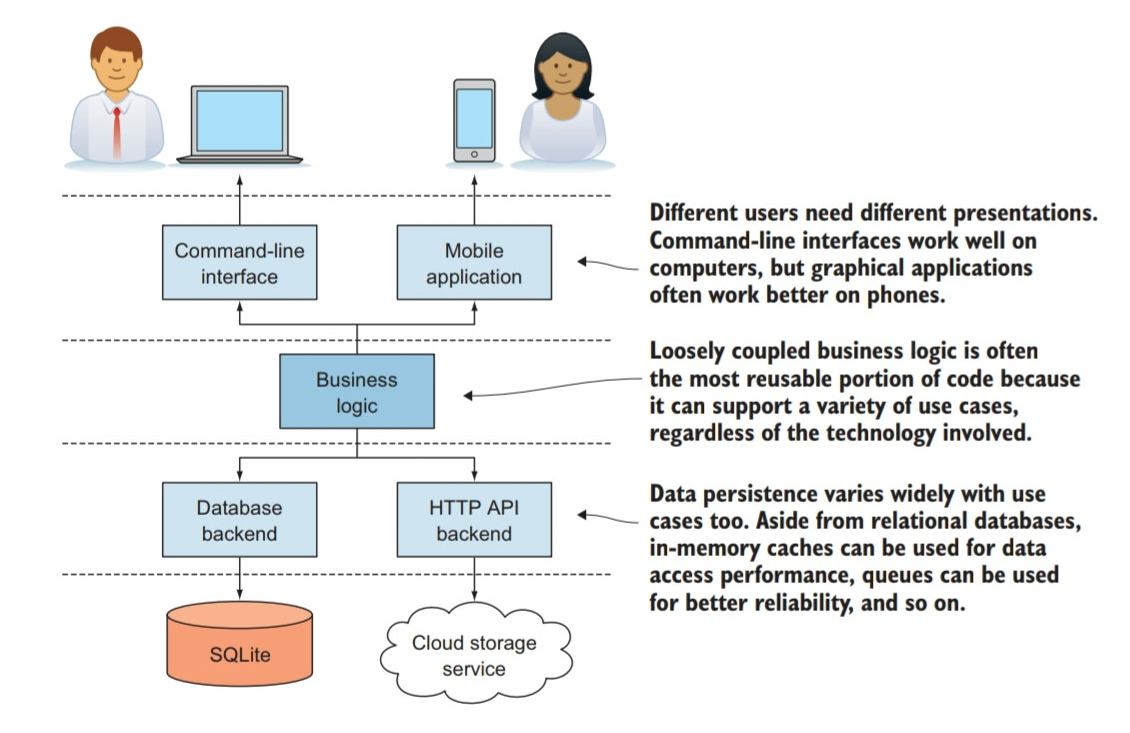

Digital services have continued to transform how companies do business since 2017. Tim Berners-Lee, who invented the World Wide Web (Web 1.0), later proposed that people could develop a Semantic Web. Web 2.0 progressed rapidly from the 1990s onward and was largely driven by three core layers of innovation: mobile, social, and cloud. It became popular in the mid-2000s with the rise of CSS, JavaScript, and HTML-based applications, along with meteoric social-media growth and data centers located around the world. Today, users rely on phones, laptops, PCs, tablets, headsets, and wearables to access information daily on both Web 2.0 and Web 3.0.

Web 1.0's first-generation capabilities merely provided information to users: browsers could only read data on static web pages, without any interactivity or searchability. Web 2.0 changed this by providing significant interactivity, so that users could contribute to online knowledge without any technical understanding and perform new interactions such as searching, filtering results, and entering data. Web 3.0 has four foundational pillars (semantic markup, blockchain and cryptocurrency, 3D visualisation, and artificial intelligence) while using the same devices as Web 2.0 and continuing to make information resources available to the world with real-time data monitoring, tracking, and immersion.

The process of social connectedness, triggered by social media and by mobile browser and app development, moved from "attracting" users to "extracting" from them; consequently, individuals and businesses began to suffer higher fees and platform safety risks across cloud service types.
Although as-a-service types are multiplying by the day, applications are usually compared across three cloud service models: Software as a Service (SaaS), Infrastructure as a Service (IaaS), and Platform as a Service (PaaS). There are also other public or private services, such as Desktop as a Service (DaaS), Functions as a Service (FaaS), and even XaaS (anything as a service). Integrated with on-premises IT infrastructure, these can form a cohesive ecosystem known as a hybrid cloud.

In practice, rather than just searching for content based on keywords or numbers, Web 3.0 can use artificial intelligence (AI) and machine learning (ML) to understand the semantics of web content, enabling users to find, share, and combine information more easily. How? Through technologies such as neuro-symbolic machine-learning architectures, artificial neural networks, blockchain, 3D graphics, and virtual and augmented reality networks that allow machines to understand and interpret information. Many of the recent revolutions in computer networking, including peer-to-peer file sharing and media streaming, have taken place at the application layer.
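Semantic markup lets machines follow relationships rather than match keywords. A minimal Python sketch, assuming a handful of hypothetical facts stored as RDF-style (subject, predicate, object) triples, answers a two-step question that a plain keyword search for "Portugal" would miss, because the event record itself never mentions Portugal.

```python
# Hypothetical facts as (subject, predicate, object) triples, loosely in the spirit of RDF.
triples = {
    ("Lisbon", "isCapitalOf", "Portugal"),
    ("Web Summit", "heldIn", "Lisbon"),
    ("Portugal", "memberOf", "European Union"),
}


def query(s=None, p=None, o=None):
    """Return triples matching the pattern; None acts as a wildcard."""
    return sorted(
        t for t in triples
        if (s is None or t[0] == s)
        and (p is None or t[1] == p)
        and (o is None or t[2] == o)
    )


# "Which events are held in the capital of Portugal?" needs two hops.
capital = [s for s, _, _ in query(p="isCapitalOf", o="Portugal")]
events = [s for s, _, _ in query(p="heldIn", o=capital[0])]
print(events)  # ['Web Summit']
```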

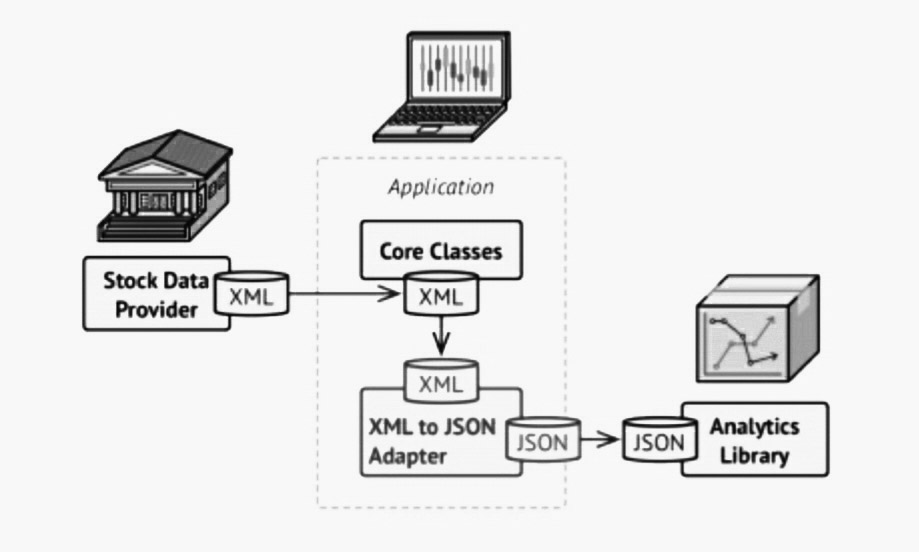

Tokenization and encryption are both used today to protect data stored in cloud services or applications, under different circumstances. The architecture of a blockchain system can be divided into six layers that work as independent systems: the data layer, the network layer (virtualized nodes that connect to the "net operating system"), the consensus layer, the incentive layer, the contract layer, and the application layer.
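To make the tokenization-versus-encryption distinction concrete, here is a minimal Python sketch of tokenization, assuming an in-memory vault (the `TokenVault` class and the `tok_` prefix are hypothetical). Unlike ciphertext, which is derived mathematically from the data and a key, a token is random and can only be mapped back through the vault.

```python
import secrets


class TokenVault:
    """Minimal in-memory tokenization vault (illustrative only)."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so the same value always maps to one token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = "tok_" + secrets.token_hex(8)  # random: no mathematical link to value
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only a caller with access to the vault can recover the original value.
        return self._token_to_value[token]


vault = TokenVault()
card = "4111 1111 1111 1111"
token = vault.tokenize(card)
print(token.startswith("tok_"))         # True
print(vault.detokenize(token) == card)  # True
print(vault.tokenize(card) == token)    # True
```

The design choice here is that stealing the token alone reveals nothing; the risk concentrates in the vault, whereas with encryption it concentrates in the key.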

Web 3.0 is being built on three new layers of spatial technological innovation: edge computing, decentralized data networks, and artificial intelligence. Furthermore, Web 3.0's spatial-internet properties rely on immersive worlds connected via the web. These potentially interoperable worlds could provide information and services across XR platforms.

More importantly, users, including businesses, students, and professionals, can overlay digital content seamlessly on the real world. This creates a spatial relationship to data and a persistent, always-on connection to the internet, so users can interact with content in their daily lives, moving between the physical and virtual worlds in real time.

Large data centres, supplemented by a multitude of powerful computing resources spread across phones, computers, appliances, sensors, and vehicles, mark a move towards trusting all constituents of a network implicitly, rather than needing to trust each individual explicitly.

This model depends on compatible interconnectivity and on a durable energy supply for a network of objects. It is also associated with a world of growing inequality, nationalism, mistrust of traditional government and banking institutions, and global warming driven by ever-faster consumption trends. One example is the climate impact of "proof-of-work" cryptocurrency mining: because these currencies require miners to compete to validate transactions on their blockchains, mining relies on power-hungry servers whose energy use contributes to air pollution and carbon emissions.
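Why proof-of-work is so power-hungry can be seen in a minimal Python sketch. It assumes a simplified scheme (SHA-256 over a block string plus a nonce, with difficulty counted in leading zero hex digits); real networks such as Bitcoin use a different block format and difficulty target. Each additional zero multiplies the expected number of hash attempts by 16, and miners compete by spending energy on exactly this kind of brute-force search.

```python
import hashlib


def mine(block_data: str, difficulty: int) -> tuple[int, str]:
    """Find a nonce whose SHA-256 hash starts with `difficulty` zero hex digits."""
    target = "0" * difficulty
    nonce = 0
    while True:
        digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
        if digest.startswith(target):
            return nonce, digest
        nonce += 1


# The nonce (roughly, the number of attempts) grows about 16x per difficulty step.
for difficulty in range(1, 5):
    nonce, digest = mine("block: Alice pays Bob 5", difficulty)
    print(difficulty, nonce, digest[:16])
```

Verifying a solution takes a single hash, while finding one takes many; that asymmetry is what makes the scheme work and what makes it energy-intensive.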

Network topologies differ, and some are more useful in certain circumstances than others; a network's configuration, or topology, is key to determining its performance, functionality, connectivity, and security.

2022 || What Are Graph Neural Networks?

2022 || Blockchain Technology and the Metaverse

2022 || Ethical Hacking Lessons

2022 || Security Resources

2022 || Cybersecurity Resources (CiberSegurança)

2022 || Security Resources: Data Security Posture Management (DSPM): What Is It And Why Does It Matter Now?


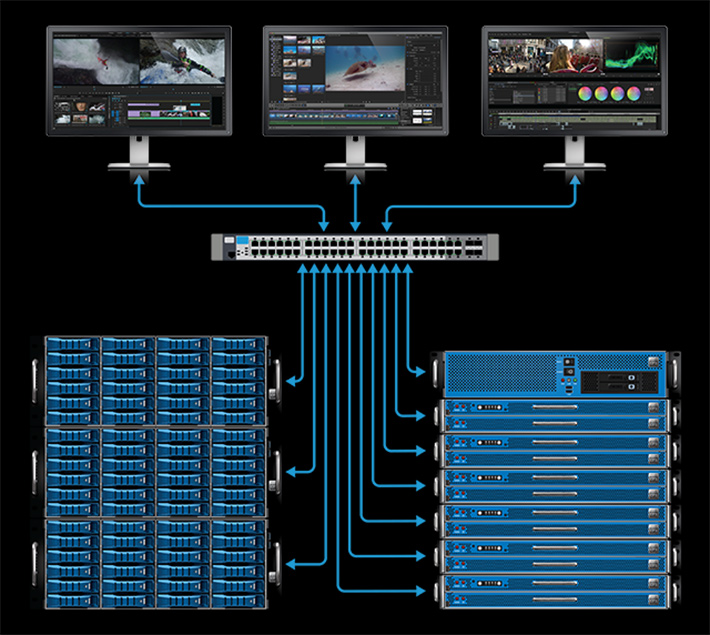


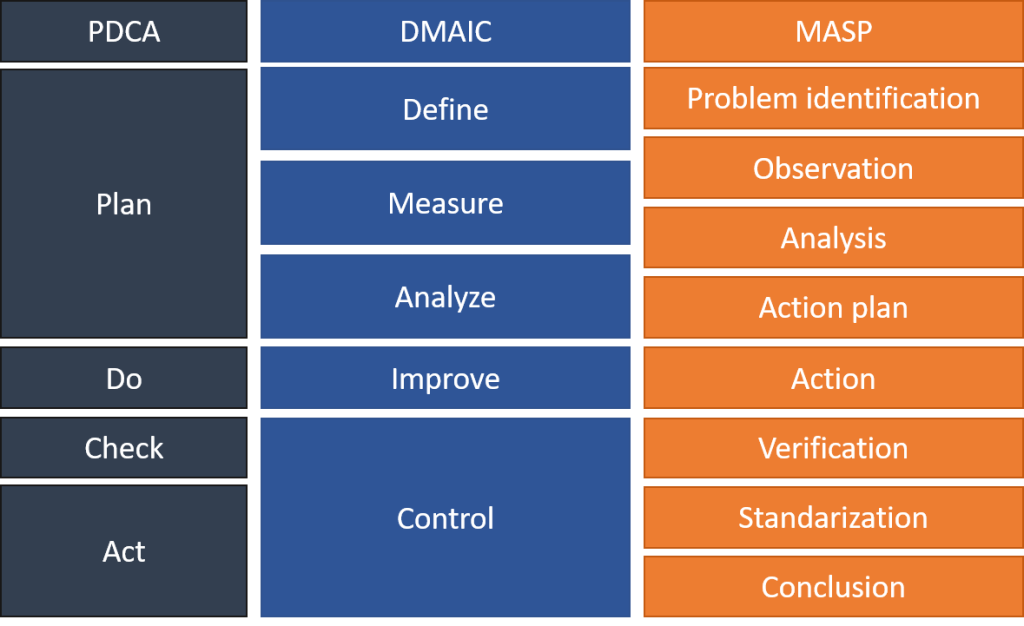

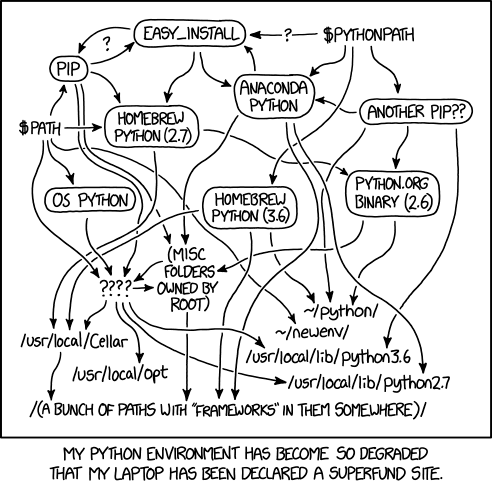

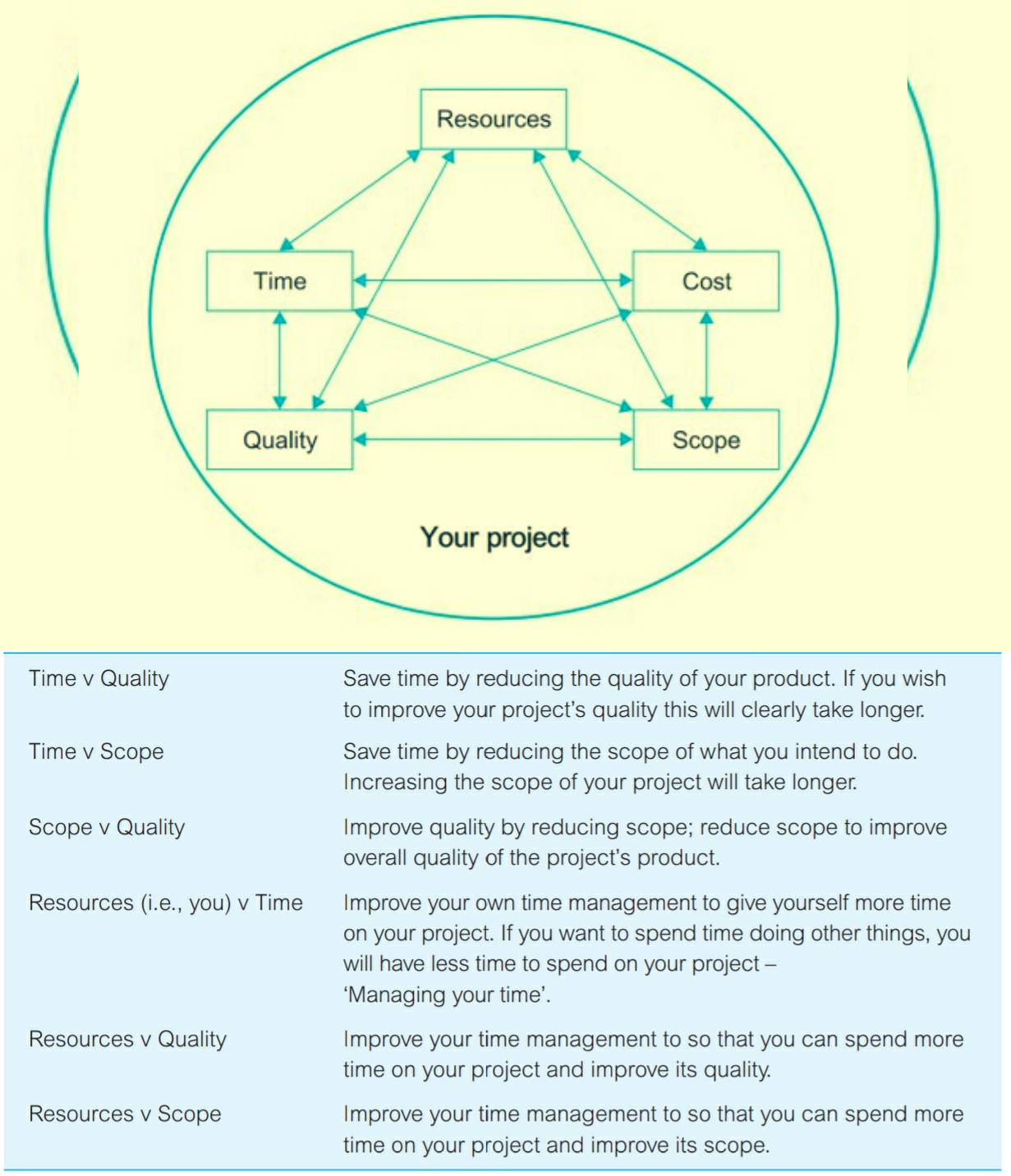

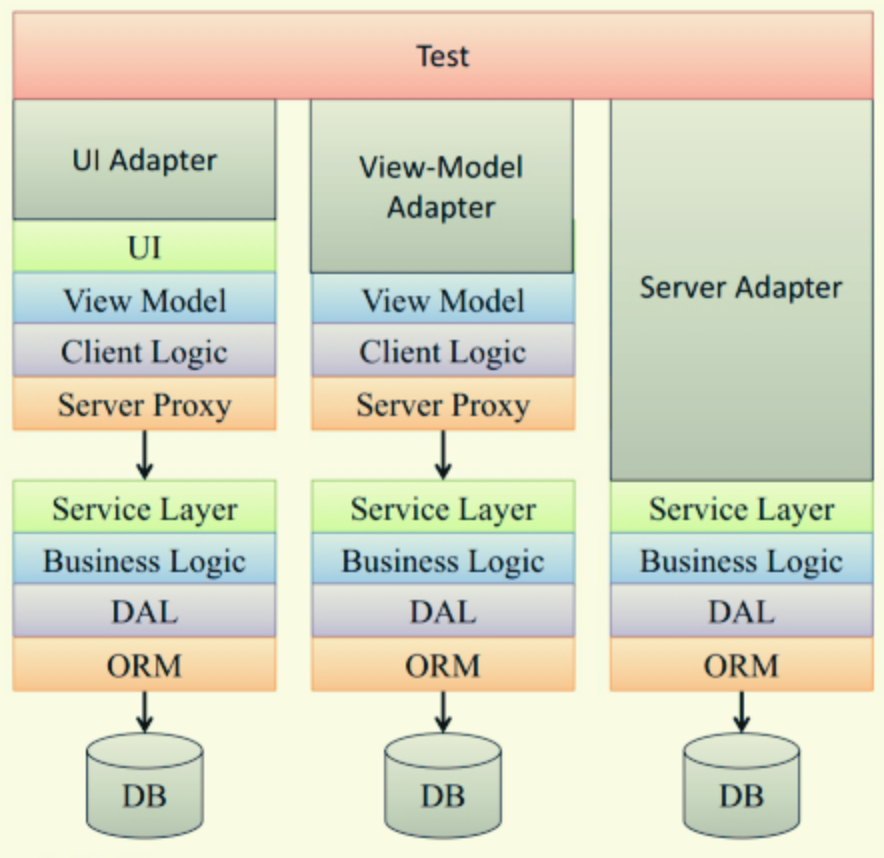

How can information systems (IS) and information technology (IT) thrive in this fast-changing environment and create systems that stand the test of time?

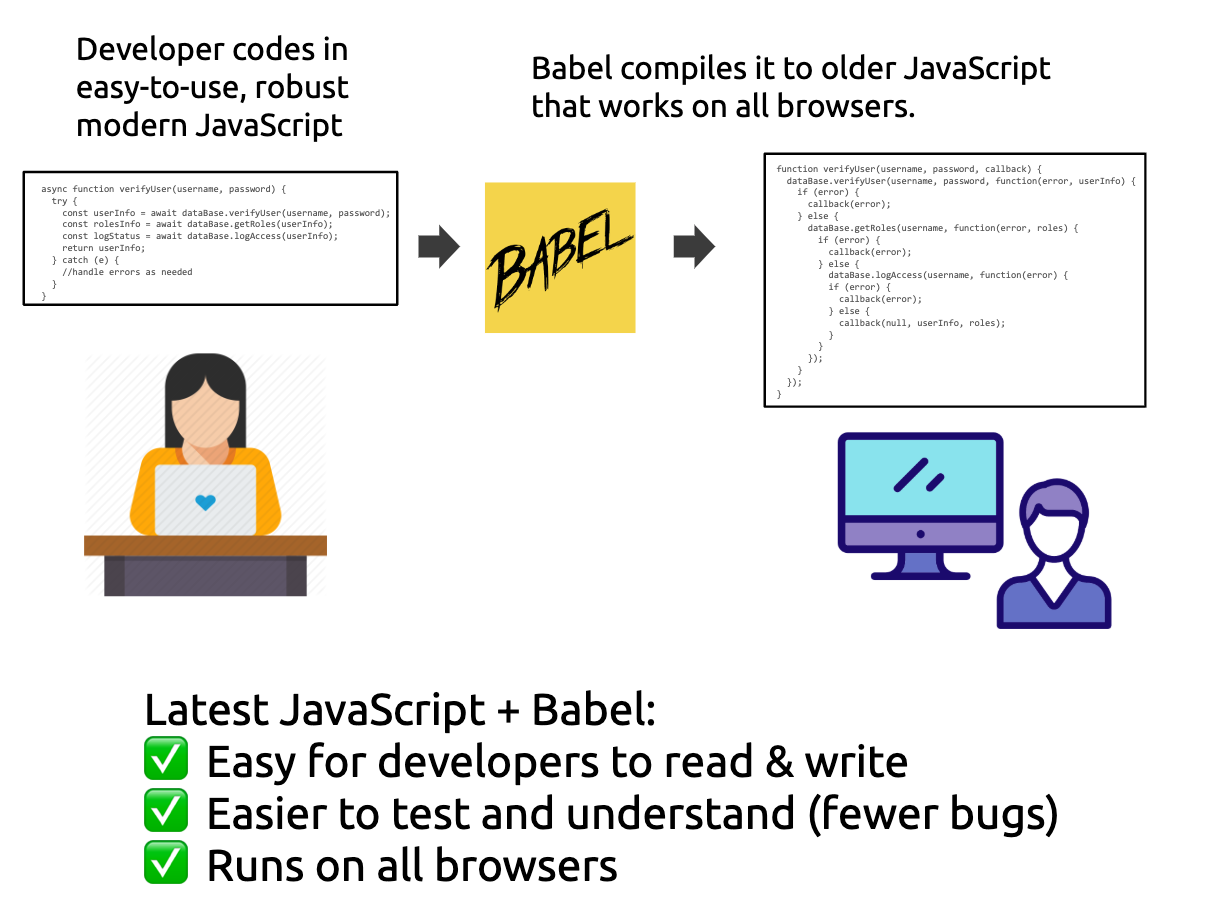

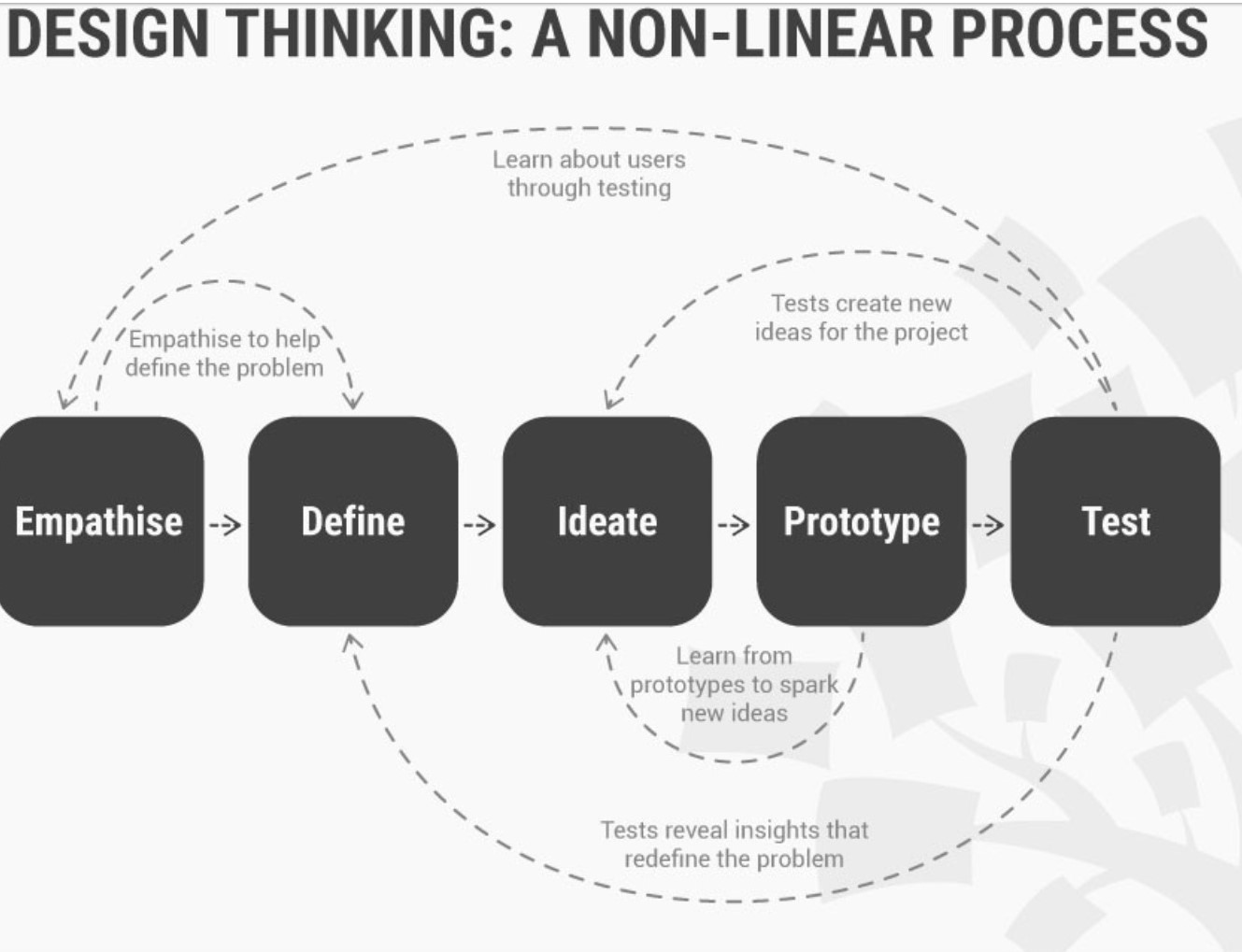

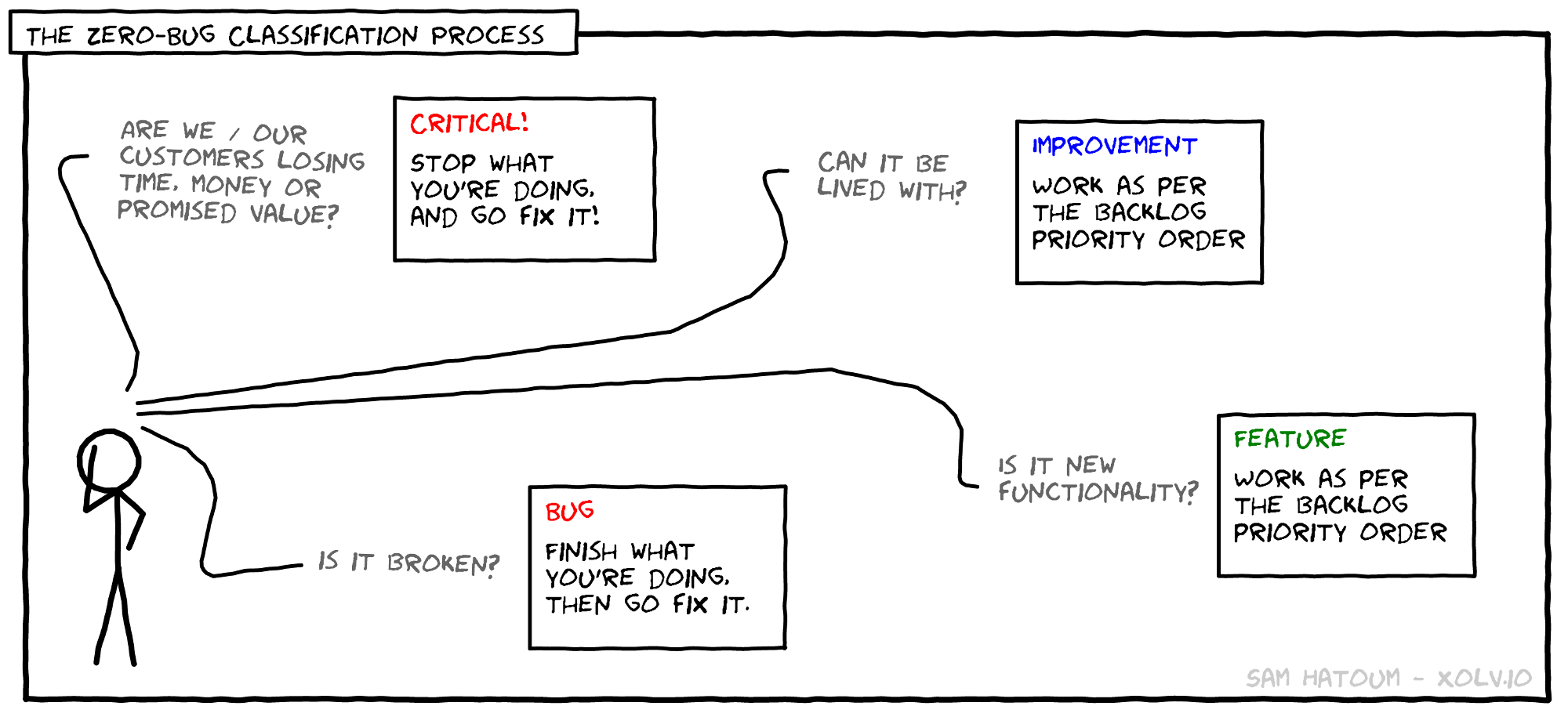

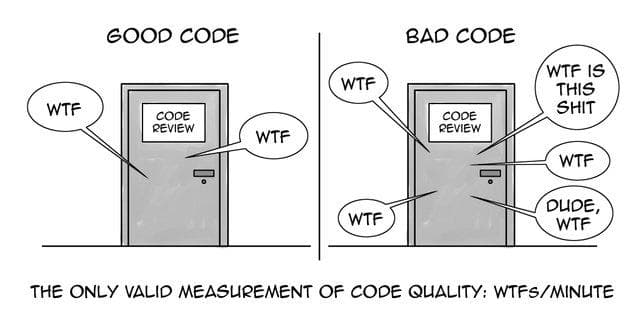

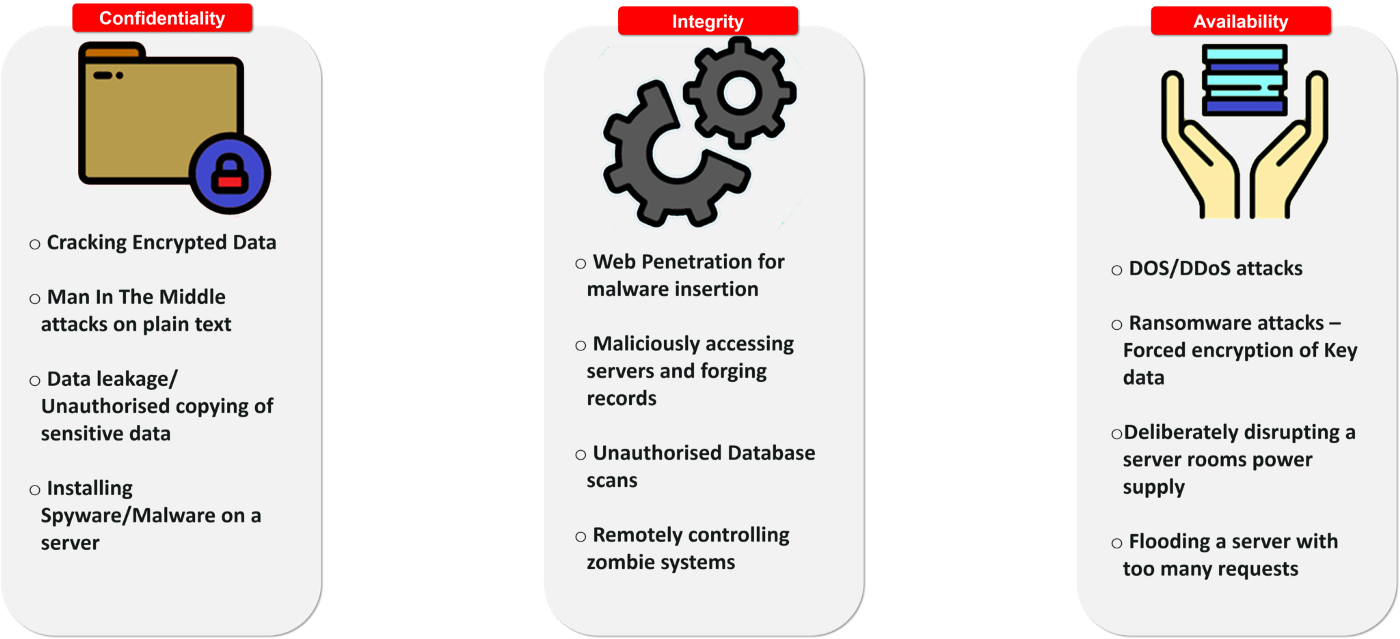

Before learning how to create secure software and supply chain that can include people, companies, natural or manufactured

resources, information, licenses, or anything else required to make your end product, you need to understand several key security concepts such as confidentiality ( keeping data safe), integrity ( data is current, correct and accurate), and availability ( measuring device was unavailable due to malfunction, tampering, or dead batteries / resilience improves availability).

There is no point in memorizing how to implement a concept if you don’t understand when or why you need it. Also, knowing the reason behind security rules and Learning these principles will ensure you make

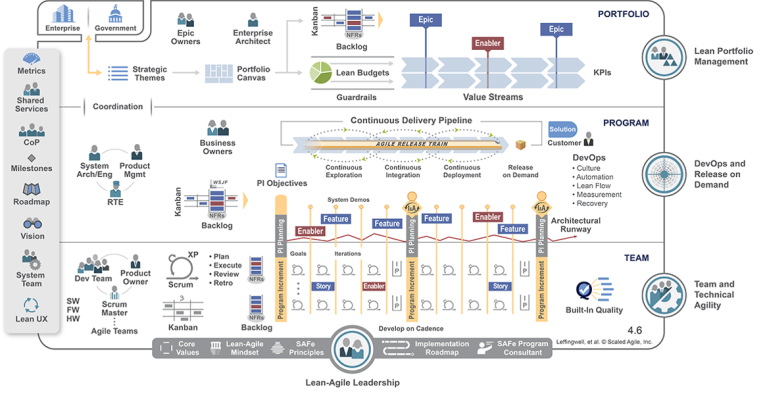

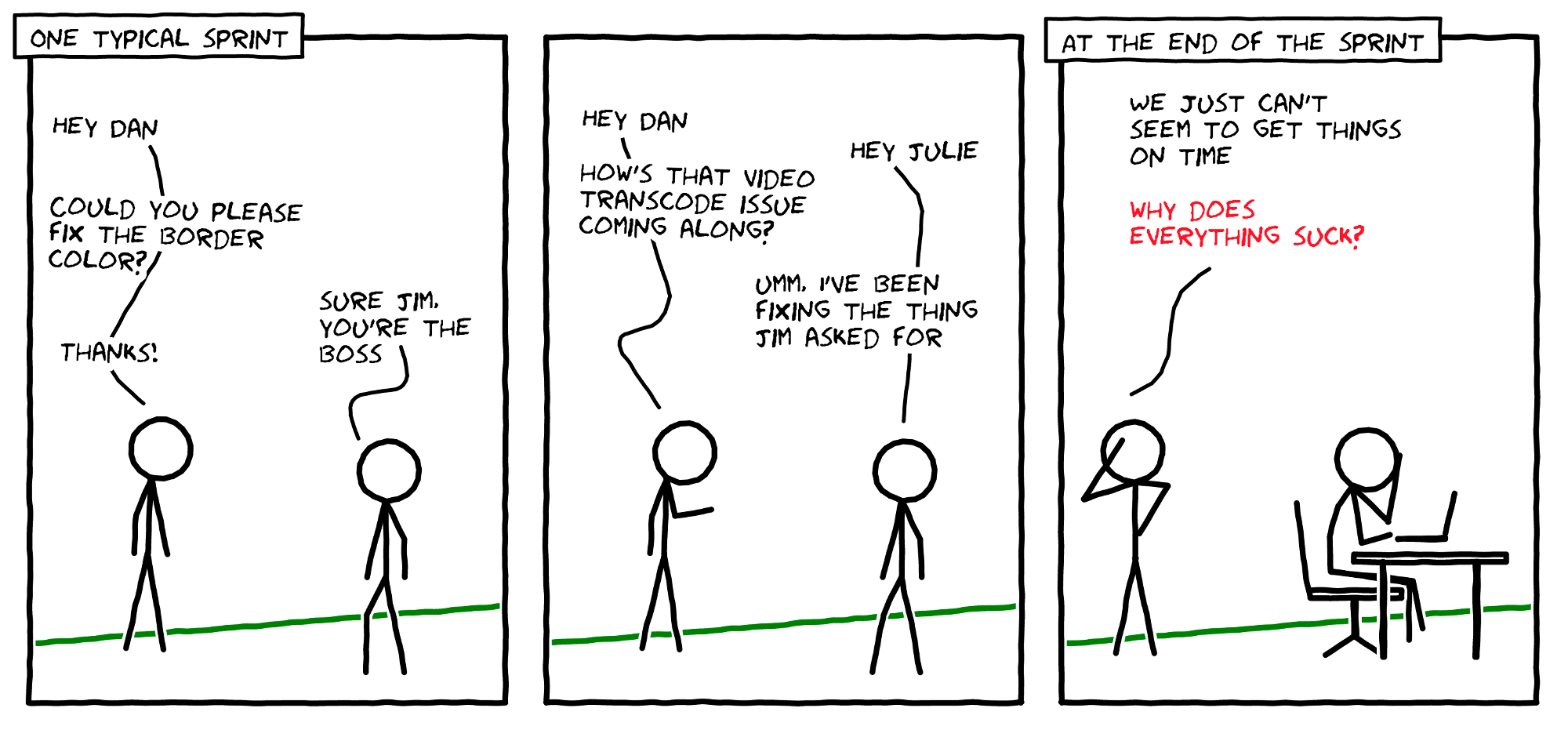

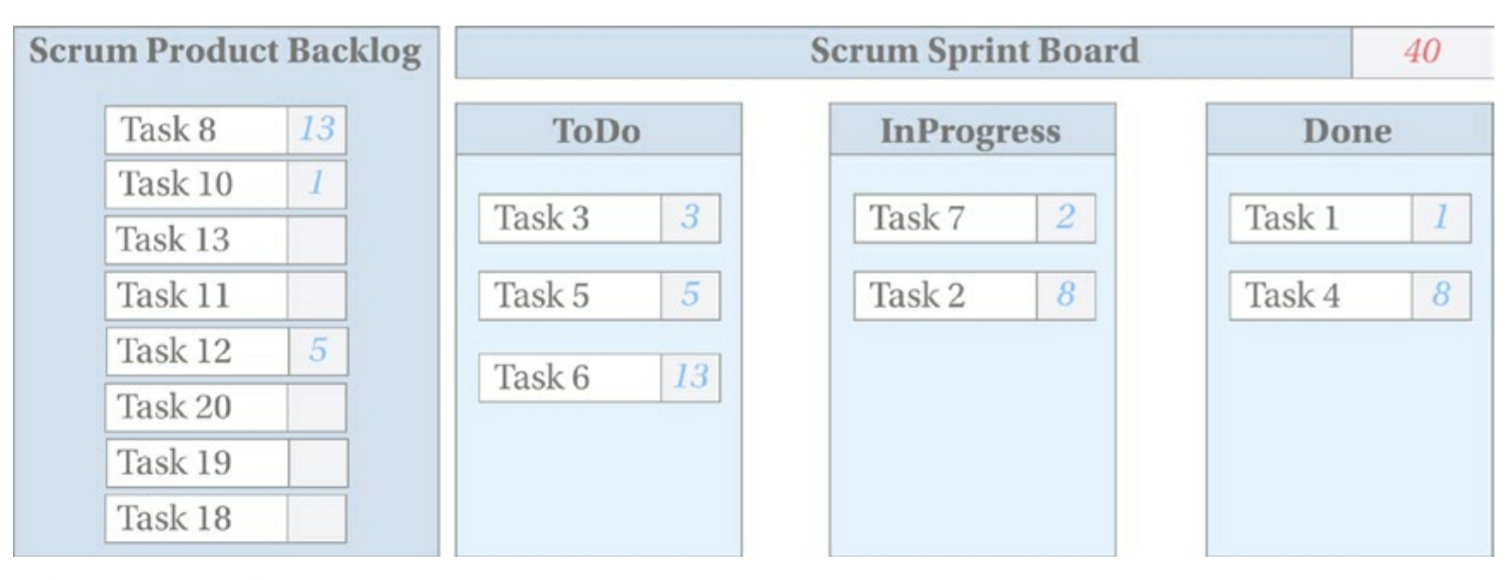

secure project decisions and are able to argue for better security when you face opposition. No matter what development methodology you

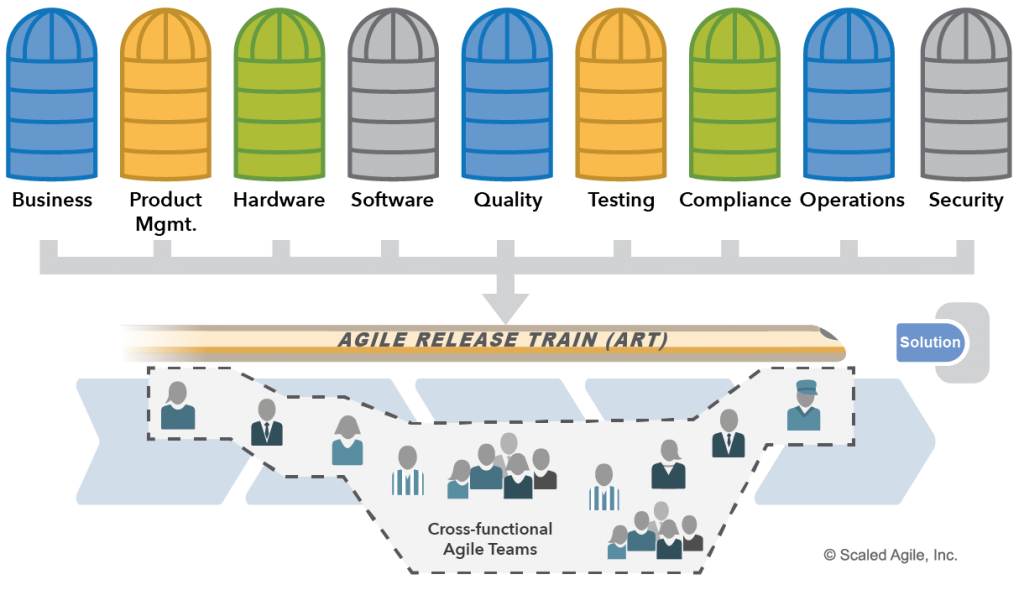

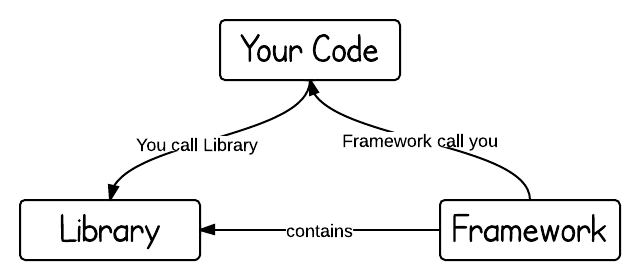

use (Waterfall, Agile, DevOps), language or framework you write it in, or type of audience you serve; without a plan you cannot build something of substance.

There are several ways to arrange a network topology (architectural, political, and logical), and attempts to experiment with new economic models based on distributed networks reflect different pros and cons:

In a centralized network, the server is a single point of failure (SPOF): one malfunction of the server shuts down the whole system, and all clients lose access to the app. SPOFs fall into three categories: hardware failures (for example, server crashes, network failures, power failures, or disk-drive crashes); software failures (for example, Directory Server or Directory Proxy Server crashes); and database corruption.
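A single point of failure can be located mechanically once a topology is written down as a graph. The Python sketch below, assuming hypothetical node names, treats a node as a SPOF if removing it disconnects the remaining nodes, and contrasts a centralized star with a simple ring. It uses brute force for clarity; Tarjan's articulation-point algorithm finds the same nodes in linear time.

```python
from collections import deque


def is_connected(adj, removed=None):
    """Return True if all nodes except `removed` can reach one another."""
    nodes = [n for n in adj if n != removed]
    if len(nodes) <= 1:
        return True
    start = nodes[0]
    seen = {start}
    queue = deque([start])
    while queue:  # breadth-first search that skips the removed node
        node = queue.popleft()
        for neighbor in adj[node]:
            if neighbor != removed and neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return len(seen) == len(nodes)


def single_points_of_failure(adj):
    """Brute force: a node is a SPOF if removing it disconnects the rest."""
    return sorted(n for n in adj if not is_connected(adj, removed=n))


# Hypothetical star (centralized) topology: every client talks only to "server".
star = {"server": ["c1", "c2", "c3"], "c1": ["server"], "c2": ["server"], "c3": ["server"]}

# Hypothetical ring (a simple decentralized topology): each node has two peers.
ring = {"n1": ["n2", "n4"], "n2": ["n1", "n3"], "n3": ["n2", "n4"], "n4": ["n3", "n1"]}

print(single_points_of_failure(star))  # ['server']
print(single_points_of_failure(ring))  # []
```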

The scalability of such a system is limited by its hardware: you can increase the resources of the single node by adding more RAM or hard drives (vertical scaling), but you cannot add more nodes (horizontal scaling).


On the other hand, Web3 decentralization could enable a web controlled by many entities (each holding local private keys) rather than by centralized corporate servers (holding hosted keys), where every client contributes to the web, as in a peer-to-peer network. The system is still vulnerable to failures and attacks, such as account takeover, digital identity theft, apps with overly aggressive monetization libraries that collect extensive details from users' devices, money laundering, and hacking, but the impact is proportional to the number of master nodes affected. Data integrity depends largely on the data-management capability of the repository: a node or group of nodes with more computational resources than the rest of the network can dominate transaction verification and control the content of the blockchain. Such a party can outpace all other nodes, manipulate the blockchain, insert fraudulent transactions, and double-spend funds, effectively taking assets from whoever accepted the reversed transactions.
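The integrity argument above rests on hash-linked blocks. Here is a minimal Python sketch of that data layer, assuming a simplified, hypothetical block format (a transaction string plus the previous block's SHA-256 hash); it omits consensus and proof-of-work entirely. Editing any past block breaks its link to the next one, which is why an attacker must recompute every later block, something only feasible with more computational power than the honest nodes.

```python
import hashlib
import json


def block_hash(block: dict) -> str:
    """Hash a block's contents deterministically (sorted keys)."""
    return hashlib.sha256(json.dumps(block, sort_keys=True).encode()).hexdigest()


def build_chain(transactions):
    chain = []
    prev = "0" * 64  # hypothetical genesis "previous hash"
    for tx in transactions:
        block = {"tx": tx, "prev": prev}
        chain.append(block)
        prev = block_hash(block)
    return chain


def is_valid(chain) -> bool:
    """Each block must reference the hash of the block before it."""
    for earlier, later in zip(chain, chain[1:]):
        if later["prev"] != block_hash(earlier):
            return False
    return True


chain = build_chain(["Alice pays Bob 5", "Bob pays Carol 2", "Carol pays Dan 1"])
print(is_valid(chain))  # True

chain[0]["tx"] = "Alice pays Mallory 5"  # tamper with history
print(is_valid(chain))  # False
```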

Such a system is also more scalable, because you can add nodes that have equal control over the system (scaling horizontally) as well as upgrade individual nodes (scaling vertically); BitTorrent, a peer-to-peer file-sharing protocol, is an example.

Historically, social and political forces have been powerful in sustaining an ideology of the networked market as a non-coercive coordination mechanism within decentralized finance. Meanwhile, governments and corporations are increasingly pursuing a reconstruction of money as a machine of control and surveillance. However, markets require significant legal and ideological enforcement to function in practice, often with substantial systemic coercion.

A better understanding of the power and limitations of each kind of network is necessary; it would encourage hybrid approaches rather than a focus on what any single model can achieve. Neither local nor hosted keys are immune to malware, viruses, worms, and other attacks, and each has pros and cons in scenarios where you need to upgrade. Nor does the distributed/decentralized dichotomy automatically yield an egalitarian, equitable, or just socio-economic and political outcome, even when the ambition is to rearrange power dynamics.

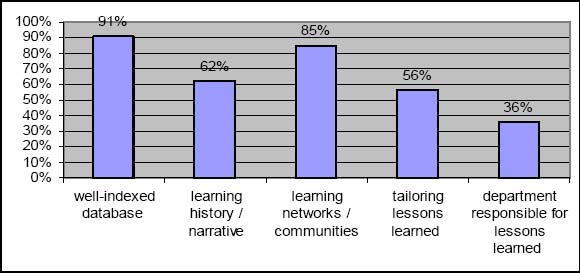

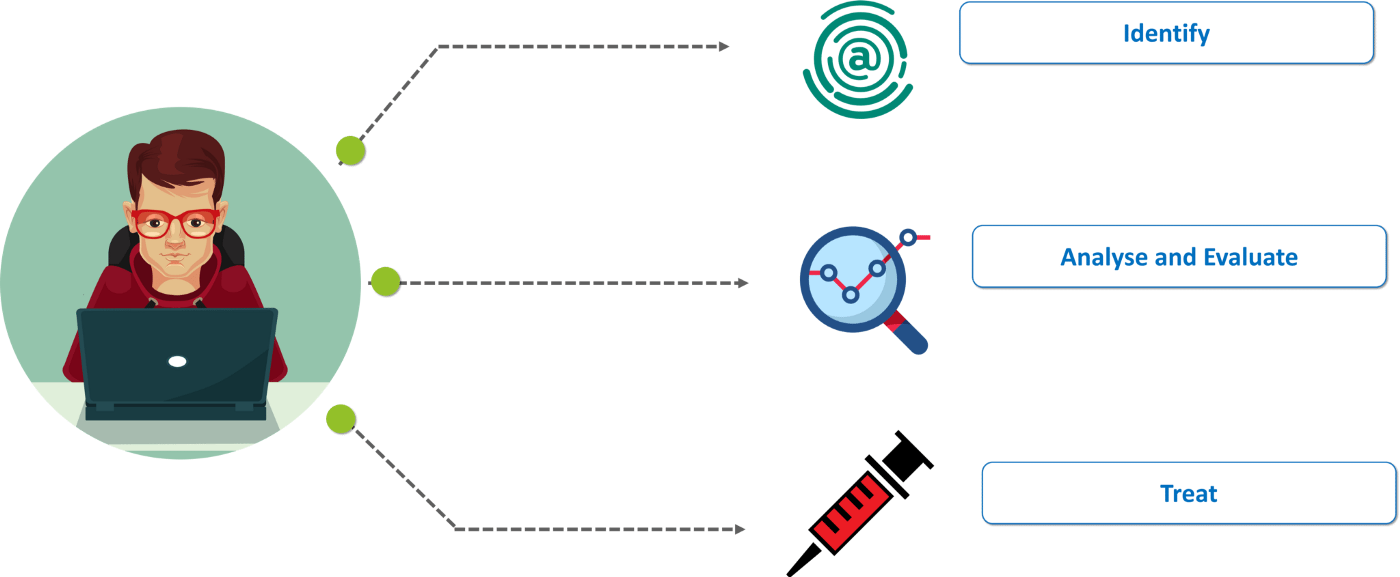

Increasing threats from cybercriminals to our information, users, and systems call for training solutions that help organizations educate staff, an essential part of running a healthy organization.

Employees are often the weakest link in the cybersecurity chain, so security training is a must-have in every company.

The slightest distraction may lead to a serious security breach. A security strategy, staff awareness, and investment in training are crucial to the success of any security policy, and training should be carried out not only in theory but also in practice, in the workplace.

Observability and security are key to closing vulnerability gaps. Modern multicloud environments help organizations innovate faster but also increase complexity and vulnerabilities, and incomplete observability solutions leave big gaps in capability. Observability that delivers answers, combined with intelligent automation, is key to closing those gaps. Here too, IT managers should identify whether they have all the skills needed to train their teams, or instead engage an external partner to provide consultancy in this area and carry out an assessment and audit. That audit then defines the technology platforms, technology processes, management and operations, mitigation, and crisis management.

Cyber risks are business risks. A network intrusion can cause lasting harm to your organization and its stakeholders. It can also affect your reputation, your people, and even your community.

THE INCREASING THREATS: IDENTITY & SECURITY | 2022

Driven by three basic motions (more software, cloud technology, and DevOps practices), digitalization has become an intrinsic element of nearly all organizations.

However, the need for more software raises the security bar and makes digital assets harder to safeguard. Cloud computing involves cutting-edge technology with varying hazards, and it eliminates or reframes the idea of a secure perimeter.

When some IT and infrastructure risks are shifted to the cloud and others are defined in software, those risks can be mitigated, but the need for sound permission and access control is reinforced.
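Permission and access control is often implemented as role-based access control (RBAC) with deny-by-default checks. The following minimal Python sketch illustrates the idea; the roles, users, and permission strings are hypothetical, and this is not the API of Kubernetes RBAC or of any cloud provider.

```python
# Hypothetical roles and permissions; names are illustrative, not from any product.
ROLE_PERMISSIONS = {
    "viewer": {"cluster:read"},
    "developer": {"cluster:read", "deploy:write"},
    "admin": {"cluster:read", "deploy:write", "secrets:read", "iam:write"},
}

# Hypothetical user-to-role assignments.
USER_ROLES = {
    "ana": {"viewer"},
    "joao": {"developer"},
    "rita": {"admin"},
}


def is_allowed(user: str, permission: str) -> bool:
    """Deny by default: allow only if one of the user's roles grants the permission."""
    return any(
        permission in ROLE_PERMISSIONS.get(role, set())
        for role in USER_ROLES.get(user, set())
    )


print(is_allowed("joao", "deploy:write"))  # True
print(is_allowed("joao", "secrets:read"))  # False
print(is_allowed("eve", "cluster:read"))   # False (unknown user)
```

Unknown users and unknown roles fall through to an empty permission set, so a missing entry denies access instead of granting it.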

" In this regard, Kubernetes has emerged as the de-facto standard for cloud-native container orchestration. Since the launching of Google Cloud’s Google Kubernetes Engine (GKE) in 2014, a radical shift to the cloud-native system has occurred in the software environment."

___

As Google realized the potential of a new search-engine platform for the growing Internet, it transferred its virtual knowledge into hardware devices and developed a network with its own operating system. Alongside smartphones and web services, it became a place where people could search the repository of human knowledge, communicate, work, consume media, and navigate endlessly.

Google also began working on experimental hardware, reshaping the world by linking, sorting, and filtering data at a nearly incomprehensible scale across the vast amount of information it provides through its different services. Meanwhile, algorithms using artificial intelligence are discovering unexpected tricks for solving problems that astonish their developers, raising concerns about our ability to control them. Unlike traditional computer programs, AI systems are designed to explore, make decisions, and develop novel approaches to tasks (DeepMind's research being a prominent driver). So we began to ask: "How is it possible for an AI system to 'outsmart' its human masters?"

Since information exchange increasingly happens online and is optimized through interconnected technologies, businesses must weigh the trade-offs between core principles and values. These issues were discussed at the GDG DevFest, which began with a focus on how tech resources could be used for the benefit of society and the improvement of collective, sustainable ecosystems able to create systems that enforce and guarantee the rights necessary to maintain a free and open society.

Ecosystems can be symbiotic or parasitic. Symbiotic relationships co-create value and can shape the market; where economics serves people rather than the other way around, bridging the "discursive gap" between policy text and practice unleashes social innovation for societal benefit.

"How can technology and innovation become more inclusive?" On the one hand, there is a wide gap between the pace of law and the pace of technology; on the other, there are different types of democracy and different interpretive views.

When the physicist Stephen Hawking spoke at the Web Summit technology conference in Lisbon, Portugal, he said that "computers can, in theory, emulate human intelligence, and exceed it." He warned that AI's impact could be cataclysmic unless its rapid development is strictly and ethically controlled: "Unless we learn how to prepare for, and avoid, the potential risks," he explained, "AI could be the worst event in the history of our civilization." To avoid this outcome, Hawking said, creators of AI need to "employ best practice and effective management."

"Is Your AI Ethical?" Responsible A.I. Has a Bias Problem, and Only Humans Can Make It a Thing of the Past: as more companies adopt A.I., more issues will surely come to the forefront.

Many businesses are already working on changes that will stop A.I. problems before they go any further. A.I. relies on several key technologies, such as machine learning, natural language processing, rule-based expert systems, neural networks, deep learning, physical robots, and robotic process automation. Some AI applications have moved beyond task automation but still fall well short of context awareness.

Responsible AI can be defined as the integration of ethical and responsible AI use into strategic implementation and business planning, producing transparent and accountable AI solutions that improve service provision. Such solutions harness, deploy, evaluate, and monitor AI systems, helping to maintain individual trust and minimize privacy invasion.
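Monitoring for bias can start with simple, auditable metrics. The Python sketch below computes the demographic parity difference (the gap in positive-prediction rates between groups) for hypothetical model outputs and group labels; it is one fairness metric among many and does not by itself show that a system is fair.

```python
def selection_rate(predictions, groups, group):
    """Share of positive predictions (1s) within one group."""
    picked = [p for p, g in zip(predictions, groups) if g == group]
    return sum(picked) / len(picked)


def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups (0.0 = parity)."""
    rates = [selection_rate(predictions, groups, g) for g in sorted(set(groups))]
    return max(rates) - min(rates)


# Hypothetical model outputs (1 = approved) and a sensitive attribute per applicant.
preds = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

gap = demographic_parity_difference(preds, groups)
print(gap)  # 0.5 (group A approved 75% of the time, group B 25%)
```

Tracking a number like this over time is one way to make the "evaluate and monitor" step concrete, though which metric is appropriate depends on the use case.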

“As A.I. systems get more sophisticated and start to play a larger role in people’s lives, it’s imperative for business to develop and adopt clear principles that guide the people building, using and applying A.I.”

“We need to ensure systems working in high-stakes areas such as autonomous driving and health care will behave safely and in a way that reflects human values.”

ARTICLE I : Breaking the Echo Chamber: How Clickbait, Algorithms, and Unethical Journalism Divide Us

ARTICLE II: The Current State and Future of AI: Benefits, Breakthroughs, and Safety


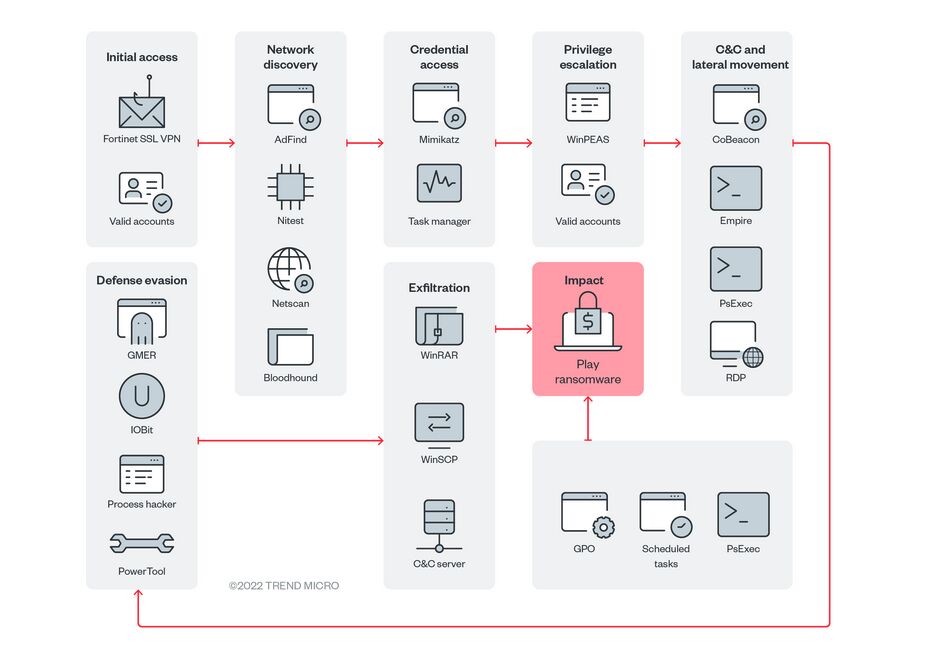

THE INCREASINF THREATS - IDENTITY & SECURITY | 2022 :

With these three basic motions: more software, cloud technology, and DevOps practices, digitalization has become an intrinsic element of nearly all organizations.

However, the requirement for extra software raises the security threshold, making it difficult to safeguard digital assets. Cloud computing entails the use of cutting-edge technology with varying hazards, as well as the elimination or reframing of the idea of a secure perimeter.

When some IT and infrastructure risks are shifted to the cloud and others are defined in software, some risks are mitigated, but the need for permission and access control is reinforced.

" In this regard, Kubernetes has emerged as the de-facto standard for cloud-native container orchestration. Since the launching of Google Cloud’s Google Kubernetes Engine (GKE) in 2014, a radical shift to the cloud-native system has occurred in the software environment."

___ As Google realized the potential of a new search engine platform for the growing Internet and transferred its virtual knowledge into hardware devices, developing a network with its own operating system alongside smartphones and web services, it became a place where people could search the repository of human knowledge, communicate, perform work, consume media and navigate endlessly.

Google also began working on experimental hardware, reshaping the world by linking, sorting and filtering data on a nearly incomprehensible scale.

This represents the nearly infinite amount of information that Google provides through its different services. Meanwhile, algorithms using artificial intelligence are discovering unexpected tricks to solve problems, tricks that astonish their developers and raise concerns about our ability to control them. Unlike traditional computer programs, AIs are designed to explore, make decisions and develop novel approaches to tasks (it wouldn't be so without DeepMind). So we began to ask: "How is it possible for an AI system to 'outsmart' its human masters?" Since information exchange increasingly happens online and is optimized through interconnected technologies, businesses must weigh the trade-offs between central principles and values. These were the issues discussed at the GDG DevFest, which began with a focus on how tech resources could be used for the benefit of society and the improvement of collective, sustainable ecosystems, able to create systems that enforce and guarantee the rights necessary to maintain a free and open society.

Ecosystems can be symbiotic or parasitic: ecological relationships co-create value and can shape the market. Wherever economics serves the people and not the other way around, bridging the 'discursive gap' between policy text and practice unleashes social innovation for societal benefit.

"How can technology and innovation become more inclusive?" On the one hand, there is a wide gap between the pace of law and that of technology; on the other, there are different types of democracy and differing interpretive views.

When the physicist Stephen Hawking spoke at the Web Summit technology conference in Lisbon, Portugal, he said that "computers can, in theory, emulate human intelligence, and exceed it."

He warned that AI's impact could be cataclysmic unless its rapid development is strictly and ethically controlled: "Unless we learn how to prepare for, and avoid, the potential risks," he explained, "AI could be the worst event in the history of our civilization." To avoid this outcome, Hawking said, creators of AI need to "employ best practice and effective management."

- "Is Your AI Ethical?" Responsible A.I. Has a Bias Problem, and Only Humans Can Make It a Thing of the Past: as more companies adopt A.I., more issues will surely come to the forefront.

Many businesses are already working toward changes that will stop A.I. problems before they go any further. AI relies on several key technologies, such as machine learning, natural language processing, rule-based expert systems, neural networks, deep learning, physical robots and robotic process automation. Some AI applications have moved beyond task automation but still fall well short of context awareness.

Responsible AI is defined as the integration of the ethical and responsible use of AI into strategic implementation and business planning: transparent and accountable AI solutions that create better service provision. Such solutions harness, deploy, evaluate and monitor AI systems, thus helping to maintain individual trust and minimize privacy invasion.

“As A.I. systems get more sophisticated and start to play a larger role in people’s lives, it’s imperative for business to develop and adopt clear principles that guide the people building, using and applying A.I.” ;

“We need to ensure systems working in high-stakes areas such as autonomous driving and health care will behave safely and in a way that reflects human values.”

ARTICLE I: Breaking the Echo Chamber: How Clickbait, Algorithms, and Unethical Journalism Divide Us

ARTICLE II: The Current State and Future of AI: Benefits, Breakthroughs, and Safety

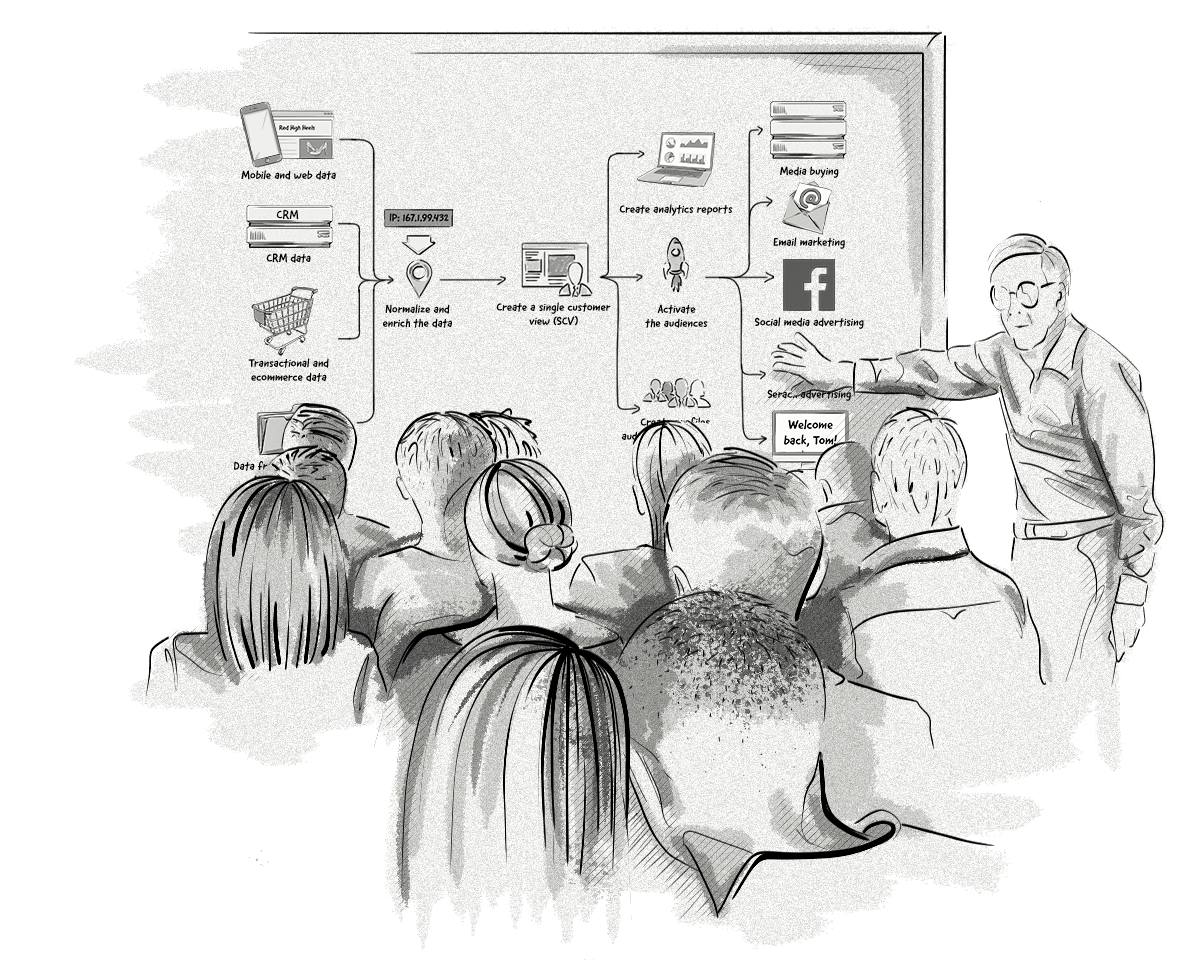

The event hosted a debate on the major technological forces currently driving digital disruption (cloud, social, mobile and Big Data), while IoT is transforming physical security in the digital age. It's not just Netflix's or Facebook's feeds that drive views by privileging the stimulus-response loop: recommendation systems that optimize for engagement have become a key interface for content consumption. Yet this same design has been criticized for leading users towards more extreme content, and other online platforms like YouTube shape users' world views, prompting new rules for the artificial intelligence programs that spread malicious content. ---------> "What are the alternatives to the danger of engagement-focused algorithms that hack the brain with deep psychological stimulation of our preferences (neuromodulation 'tuned' to activate) through 'emotional surveillance technology'?" Imagine a world where citizens are not products, clients or customers but rather holders of public human rights, and where the economy is a tool we humans invented, like democracy and politics, to help govern our relationships with each other, with nature and with the world we live in. If these tools aren't delivering the outcomes that make us happy, safe, healthy and better educated, with fair jobs and health and education systems regardless of how much money you have or where you live, and aren't protecting and preparing our country for an increasingly uncertain future, while quality of life stagnates and our environment suffers, then it's time our economic tools and practices changed to embrace transformative policies that let data analysis improve future business decisions and reprioritise our investments.
This is where the rights approach to ethical decision making comes in, although fairness can be defined differently across languages, cultures and political systems. The rights approach "stipulates that the best ethical action is that which protects the ethical rights of those who are affected by the action. It emphasizes the belief that all humans have a right to dignity" (Bonde and Firenze, A Framework for Ethical Decision Making). Technologies appear to share the same goal: to make the city more efficient, connected and socially harmonious, turning it from a "healthy body" into an "efficient machine". These signaling technologies transformed the city into a programmed and programmable entity, a machine whose behavior can be predicted, controlled and modulated according to principles established by some well-intentioned technocrat. However, the use of AI across many industries, from retail to supply chain, banking to finance and manufacturing to operations, has changed the way industries operate: predictive AI threat detection (for example, deep-learning-based malware detection that identifies and blocks threats), deep learning acceleration built directly into the chip, and Intel® hardware (architecture, accelerators, memory, storage, software and security). In social media environments, digital marketers have created new ways to connect and engage with their target audience and to measure media marketing performance. On the other hand, this has raised ethical concerns and carries the risk of attracting consumers' distrust: harmful marketing appeals, lack of transparency, information leakage and identity theft. Developing AI solutions should take into account human rights, fairness, inclusion, employment and equality. Doing so can lead to gains in credibility for products and brands, ensure brand safety and protect consumers from fraud and the dissemination of fake information, thus increasing customer trust in brands.
Recognizing the value of sensitive data and the harm that could be caused if certain data were to fall into the wrong hands, many governments and industries have established laws and compliance standards under which sensitive data must be pseudonymized or anonymized. Europe is putting pressure on internet companies like Facebook and Google to safeguard against hate speech, trolling, cyber-bullying, fake news, sex trafficking and terrorist activity online. The GDPR (General Data Protection Regulation), adopted by the European Parliament and the Council of the EU and published on 4 May 2016, has applied since 25 May 2018 and aims to safeguard the data privacy rights of individuals in the EU. Combined with the EU Court of Justice's "right to be forgotten" judgment, it has set a precedent for the way companies handle their consumers' data. Individuals now have the "right to data portability", the "right of access" and the "right to be forgotten", and can withdraw their consent whenever they want, including consent to intrusive online brand presence.

"Social media marketing is in transition as AI and analytics have the potential to liberate the power of social media data and optimize the customer experience and journey. Widespread access to consumer-generated information on social media, along with appropriate use of AI, have brought positive impacts to individuals, organisations, industries and society" (Cohen, 2018).
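The requirement that sensitive data be pseudonymized can be illustrated with a minimal sketch, assuming a secret key that is stored separately from the data (the field names, the sample record and the key handling are hypothetical). Each direct identifier is replaced with an HMAC-SHA256 digest, so records can still be joined on the pseudonym without exposing the raw value. Under the GDPR, pseudonymized data is still personal data, because whoever holds the key can re-link records by recomputing the digests; anonymization must make re-identification impossible.

```python
import hashlib
import hmac
import secrets


def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace a direct identifier with a keyed SHA-256 digest (HMAC).

    The same identifier and key always give the same pseudonym, so records
    can still be linked; without the key the pseudonym cannot be recomputed.
    """
    normalized = identifier.strip().lower()  # "Ana@Example.com " and "ana@example.com" match
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize_record(record: dict, fields: set, key: bytes) -> dict:
    """Return a copy of `record` with the listed identifier fields pseudonymized."""
    return {
        name: pseudonymize(str(value), key) if name in fields else value
        for name, value in record.items()
    }


# Hypothetical key: in practice it would live in a separate key store, not next to the data.
key = secrets.token_bytes(32)
customer = {"email": "Ana@Example.com", "country": "PT", "purchases": 3}
print(pseudonymize_record(customer, {"email"}, key))
```

Using a keyed hash rather than a plain hash is the design choice that matters here: a plain SHA-256 of an email address can be reversed by hashing a list of known addresses, while the HMAC requires the key.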

-- Considering these principles, a chain of potentially relevant questions arises about the General Data Protection Regulation: "How are organisations ensuring that content posted by staff and consumers does not compromise the brand's ethical principles, managing their social media presence in line with data protection, visual misinformation and privacy regulations? What do you need to protect if an adversary gains access to information that is sensitive to you? What are the risks of compromise, and how can they be mitigated? What practices and mechanisms can enable firms to cultivate an ethical culture of AI use? How can digital marketing professionals ensure that they use AI to deliver value to target customers with an ethical mindset?"

- --> Getting interested in privacy law, digital legislation and AI ethics regulation matters because it builds digital literacy: an education that helps us take civic responsibility for the environmental impacts of human-machine relationships, protect and critically question our own values, and bridge the discursive gap between policy text and practice towards re-formed conceptions of learning, creativity and identity in the new machine age. Individuals seek different types of capital (information, social and cultural), and this comes into play whenever companies use artificial intelligence or machine-learning systems, for example for recruitment. In the process of seeking various types of capital through digital marketing platforms, consumers experience both positive (benefits) and negative (costs) effects. Filtering perception and awareness ("munitions of the mind") has also been present throughout history, from stone monuments, coins, broadsheets, paintings and pamphlets to posters, radio, film, television, computers and satellite communications, as propaganda has gained access to ever more complex and versatile media. The velocity of information flow, the volume of information shared, network clusters and cross-posts on different social media can be analyzed and compared to measure negative and positive electronic word-of-mouth. These intra-interaction consequences, such as consumers' cognitive, emotional and behavioral engagement with the brand, trigger extra-interaction consequences of brand trust and attitude, thus developing brand equity through the DCM strategy.
It also articulates and builds the digitally enabled capabilities required to transform linear supply chains into connected, intelligent, scalable, customizable and nimble digital supply networks through synchronized planning, intelligent supply, smart operations and dynamic fulfillment. Digital supply networks draw on hyper-connectivity, social networking, cognitive computing (where MATLAB, Excel and Python can transform raw sound data into numeric data for machine learning, improving the training accuracy of deep learning models), cloud computing combined with software-as-a-service (SaaS) delivery models, and 3D printing. On preparing data for such models: "This means that if your data contains categorical data, you must encode it to numbers before you can fit and evaluate a model. The two most popular techniques are an integer encoding and a one hot encoding, although a newer technique called learned embedding may provide a useful middle ground between these two methods." Customer analytics can be used to make smarter business decisions that generate more loyal customers, ensuring that customers have positive experiences with the company at all levels, from initial brand awareness to loyalty, which is crucial to the success of any business. This often leads to confusion (the 'discursive gap') about when and how to deploy which information technology to maximize value creation opportunities during the stages of the customer journey, usually raising questions such as: "What is the interplay between customer traits (e.g. innovativeness, brand involvement, technology readiness) and the attributes of technological platforms in this process? What firm capabilities are required to capture, manage and exploit these innovation opportunities from customers to gain a deeper understanding of them?"
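To make the quoted point about categorical data concrete, here is a minimal pure-Python sketch of the two techniques it names; the `colors` data is made up for illustration, and learned embeddings are not shown. Integer encoding suits ordinal data, where the order of the numbers carries meaning. For nominal data such as colors, a model could read a false order into 0, 1, 2, which is why one-hot encoding is usually preferred there.

```python
from typing import Dict, List, Sequence, Tuple


def integer_encode(values: Sequence[str]) -> Tuple[List[int], Dict[str, int]]:
    """Map each distinct category to an integer, in order of first appearance."""
    mapping: Dict[str, int] = {}
    encoded: List[int] = []
    for value in values:
        if value not in mapping:
            mapping[value] = len(mapping)  # next unused integer
        encoded.append(mapping[value])
    return encoded, mapping


def one_hot_encode(values: Sequence[str], mapping: Dict[str, int]) -> List[List[int]]:
    """Turn each category into a binary vector with a single 1 at its integer index."""
    rows: List[List[int]] = []
    for value in values:
        row = [0] * len(mapping)
        row[mapping[value]] = 1
        rows.append(row)
    return rows


colors = ["red", "green", "blue", "green"]  # nominal data: no natural order
codes, mapping = integer_encode(colors)
print(codes)                            # [0, 1, 2, 1]
print(one_hot_encode(colors, mapping))  # [[1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 1, 0]]
```

Libraries such as scikit-learn ship equivalent encoders; this sketch avoids them only to stay self-contained.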
---- There are different types of data: nominal, ordinal, discrete, continuous, interval and ratio scale. Netflix's dynamic optimizer, for example, attempts to improve the quality of compressed video by gathering data, initially from human viewers, and using it to train an algorithm that makes future choices about video transmission, with the aim of delivering personalized and targeted experiences that keep multiscreen audiences coming back. Netflix's content library is vast, but much of it is geographically restricted due to copyright agreements, so the available movies and TV shows vary by country. When traveling abroad, you may need a VPN to securely access your usual home streaming services. Because not all Netflix shows are available worldwide, many subscribers turn to VPNs that disguise their location and fool the streaming service into offering them the content catalog for a different region, but Netflix blocks most of them, and not all VPNs work with Netflix. The rapid growth of digital devices and their access to the Internet has created security threats to user data. As attackers adopt advanced techniques, security and privacy threats become more sophisticated day by day, increasing the demand for up-to-date technical skills and highly secure channels to protect entities and their valuable information on the Internet. "Netflix's machine learning algorithms are driven by business needs."

AI is progressing in broadcast and media through some mainstream applications that uncover patterns which aren't always intuitive to human perception and can change consumer behaviour. The two most viewer-centric applications are content discovery and content personalization. Netflix's new AI tweaks each scene individually to make video look good even on slow internet connections. It also tracks the movies we watch, our searches, ratings, when we watch, where we watch, what devices we use, and more. In addition to machine data, Netflix algorithms churn through massive amounts of movie data derived from large groups of trained movie taggers. Google, likewise, is using artificial intelligence to make YouTube content safer for brands. It uses deep learning, which builds artificial neural networks that mimic the way organic (living) brains sort and process information, applying AI in a number of areas.
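Tag-based content discovery, of the kind fed by the human movie taggers described above, can be sketched with a simple similarity measure. This is only an illustration under stated assumptions: it ranks titles by Jaccard similarity between hypothetical tag sets, and it is not Netflix's actual algorithm, which the source does not describe.

```python
from typing import Dict, List, Set, Tuple


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Shared tags divided by all distinct tags; 0.0 when both sets are empty."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def similar_titles(target: str, catalog: Dict[str, Set[str]], k: int = 2) -> List[Tuple[str, float]]:
    """Return the k titles whose tags overlap most with the target's tags."""
    scores = [
        (title, jaccard(catalog[target], tags))
        for title, tags in catalog.items()
        if title != target
    ]
    scores.sort(key=lambda pair: (-pair[1], pair[0]))  # highest score first, ties by name
    return scores[:k]


# Hypothetical catalog: titles and tags are invented for the example.
catalog = {
    "Title A": {"heist", "thriller", "spanish"},
    "Title B": {"thriller", "crime", "spanish"},
    "Title C": {"documentary", "nature"},
    "Title D": {"heist", "comedy"},
}
print(similar_titles("Title A", catalog))  # [('Title B', 0.5), ('Title D', 0.25)]
```

Production recommenders combine many more signals (viewing history, time, device), but the idea of scoring overlap between descriptive tags is the same starting point.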

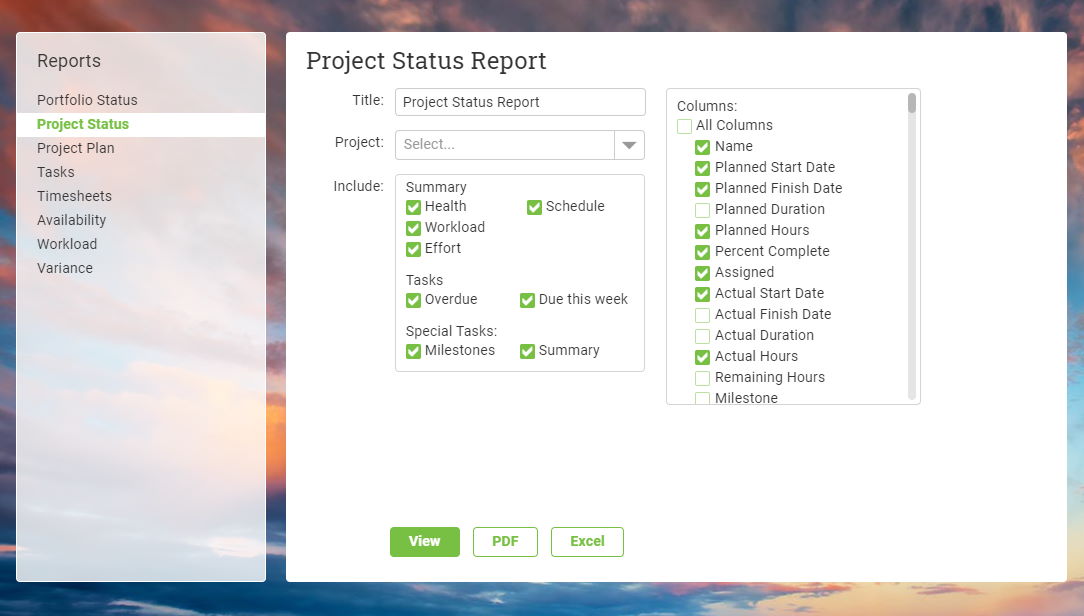

There are dozens of reporting features and metrics for reviewing web services. With Search Console, you can monitor, maintain and troubleshoot your presence in Google's Search results and be aware of any warnings or issues that come directly from Google. On a technical level, Google Analytics works through JavaScript tags that run in your website's source code, and it is often deployed with Google Tag Manager. These JavaScript tags set cookies in visitors' browsers that harvest personal and sometimes sensitive data from them. The question arises: "Is Google Analytics GDPR-compliant to use? How do you balance Google Analytics, cookies and end-user consent on your website?" Google Tag Manager is a hugely popular tool for websites of any size and shape. It organizes all third-party tags on your website (like Google Analytics or Facebook pixels), and it also controls when these are triggered. In other words, tags are what happens, while triggers are when it happens. Website owners should know that almost all such third-party tags set cookies that, under EU law (the GDPR), fall into categories requiring the explicit prior consent of your users. Inception v3 is a convolutional neural network that assists in image analysis and object detection; it grew out of GoogLeNet, which introduced the Inception module. Shader programming, in turn, is done in the OpenGL Shading Language (GLSL).

RESEARCH COLLECTION | 2020: Connect Google Analytics to Google Data Studio
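The distinction between tags (what happens) and triggers (when it happens), combined with the rule that most third-party tags need prior consent, can be modelled in a few lines. This is a hypothetical sketch, not Google Tag Manager's API: the `Tag` class, the consent category names and the tag names are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Set

Event = Dict[str, str]


@dataclass
class Tag:
    """Hypothetical model of a third-party tag."""
    name: str                          # what happens, e.g. send a page view
    trigger: Callable[[Event], bool]   # when it happens
    consent_category: Optional[str]    # consent needed first; None = strictly necessary


def fire_tags(event: Event, tags: List[Tag], granted: Set[str]) -> List[str]:
    """Return the names of the tags allowed to fire for this event."""
    fired: List[str] = []
    for tag in tags:
        if not tag.trigger(event):
            continue  # trigger condition not met: nothing happens
        if tag.consent_category is not None and tag.consent_category not in granted:
            continue  # no prior consent for this category: the tag is blocked
        fired.append(tag.name)
    return fired


tags = [
    Tag("analytics_pageview", lambda e: e.get("event") == "page_view", "analytics"),
    Tag("ads_conversion_pixel", lambda e: e.get("event") == "purchase", "marketing"),
    Tag("strictly_necessary_session", lambda e: True, None),
]

print(fire_tags({"event": "page_view"}, tags, granted=set()))
# ['strictly_necessary_session']
print(fire_tags({"event": "page_view"}, tags, granted={"analytics"}))
# ['analytics_pageview', 'strictly_necessary_session']
```

Checking consent before a tag fires, rather than deleting cookies afterwards, reflects the "explicit prior consent" requirement: without consent the analytics tag never runs at all.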

Onwards: the next era of spatial computing, and how Google allows us to experience 3D and augmented reality in Search. User experience is still a primary obstacle to AR mass adoption, and the biggest obstacle to VR mass adoption too, as AR gradually gains influence in the automobile industry. In the future, people will access information via glasses, lenses or other mobile devices, while autonomous vehicles, drones and robots move freely through environments, understanding where they are, where they are going and what is around them. By solving the problem of inaccurate GPS positioning with camera-enabled visual positioning (Scape's VPS long-term vision), many of the applications once imagined by AR developers are now a reality, and AR revenues were expected to surpass VR revenues by 2020. Nowadays, almost everyone owns a cellphone, and mobile phones now have the hardware AR requires, including CPU, sensors and GPU, enabling infrastructure for a vast array of new spatial computing services, accelerated by the imminent arrival of widespread 5G networking and edge compute, which deliver massive bandwidth at extremely low latency.

RESEARCH COLLECTION | 2020 - Web VR Experiments with Google

LEARNED LESSONS | 2020: WebXR Frameworks

"Customer experience with Digital Content refers to a customer’s perception of their interactive and integrative participation with a brand’s content in any digital media. " - (Judy & Bather, 2019) -

In addition to adding augmented reality to product value, Microsoft has been offering MS Office applications for its HoloLens device, showing what future offices could look like without screens and hardware. This could also point to new virtual competitors. AR apps can serve as a further direct-to-consumer channel. Some unanswered questions that are both theoretically and managerially relevant are: "How does it impact consumer-brand relationships, for instance, if consumers 3D-scan branded products and replicate them as holograms? How do consumers interact with virtual products in their perceived real world compared to real products, and what advantages and disadvantages do consumers see in virtual versus real products? Which dynamic capabilities drive the success of Augmented Reality Marketing? Which competencies do Augmented Reality marketers need? How should these requirements be integrated into digital marketing curricula to lead to better decisions and lower return rates? How should Augmented Reality Marketing be organized and implemented, and how are good content marketing, good storytelling and inspirational user experiences organized? What drives the adoption of Augmented Reality? How can the success of Augmented Reality Marketing be measured?"

_______________

At the end of the event, I suggested to Filipe Barroso (responsible for organizing the Lisbon Google Developer Groups event) that it would be invaluable to get in touch with programming schools like ETIC, so that we could all engage in future educational workshops together, intersecting areas and interacting through the events. For a person who is learning, it was important to interconnect: students were open to initiatives that included group and teamwork contexts, sharing knowledge and opportunities to grow.

When I spoke to Filipe Barroso, I felt it would be a good initiative for all: generating revenue and bridging the gap between seeking knowledge and sharing it, an opportunity for self-discovery and personal development. Eventually, other initiatives that started with this Google event spread in the same direction, and colleagues in web development and media programming brought everyone together for a joint Code in the Dark event, merging different courses in a Google workshop. I was willing to join, because it taught me more than the programming skills any good programmer strives for, such as coding (20%), algorithmic thinking (30%), computer science and software engineering concepts (25%), and languages and software technologies (25%). The soft skills and proactive initiatives I learned from others during this process were, for me, more about "sharing" in "effective interpersonal relationships": being aware that all social movements are about others, about how to learn with each other as a team, co-working towards mutual success rather than individual achievement. This is what I understand to be the qualities of teamwork and #the road to create value. When studying programming, soft skills are put to practical use, extending collective job satisfaction beyond a co-working perspective.

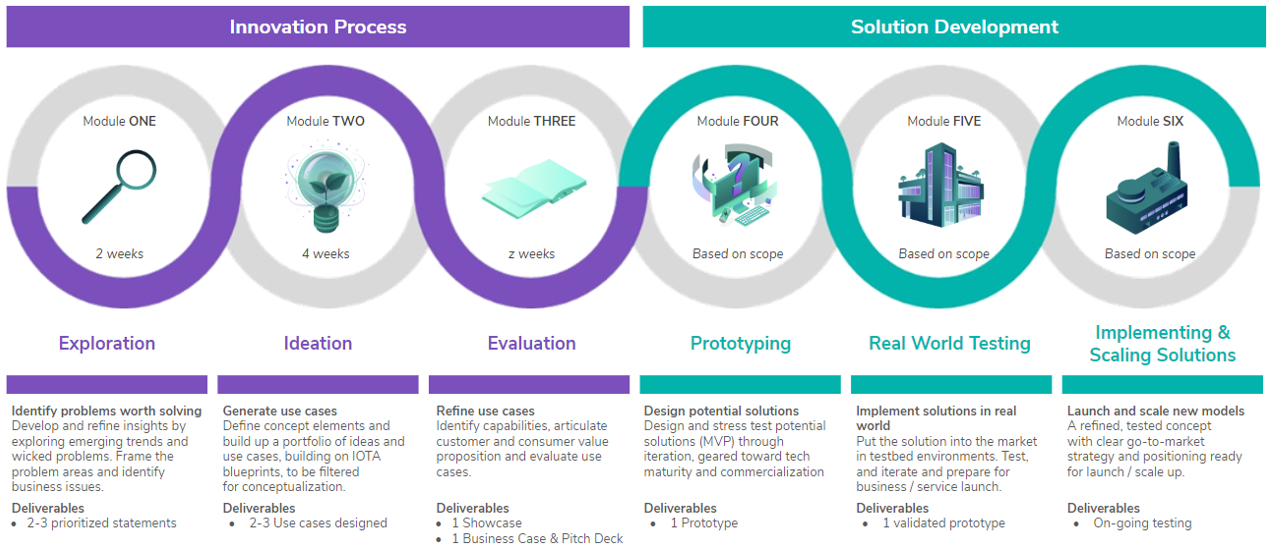

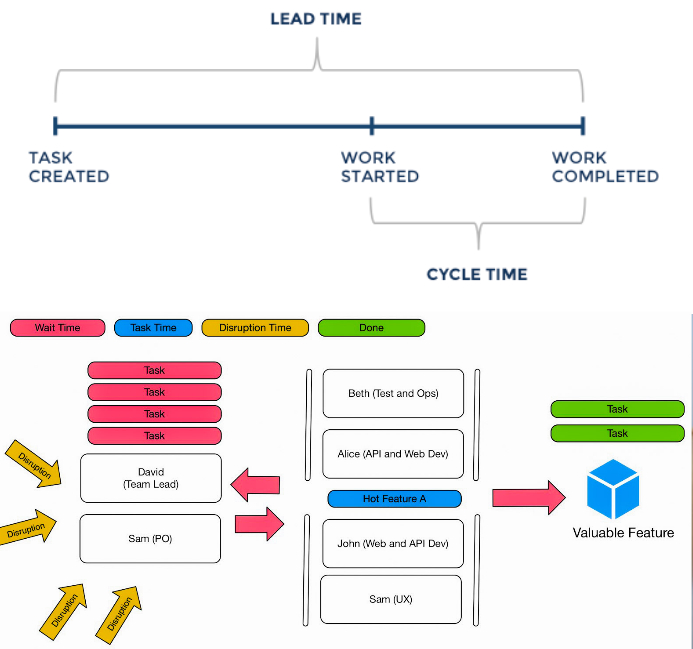

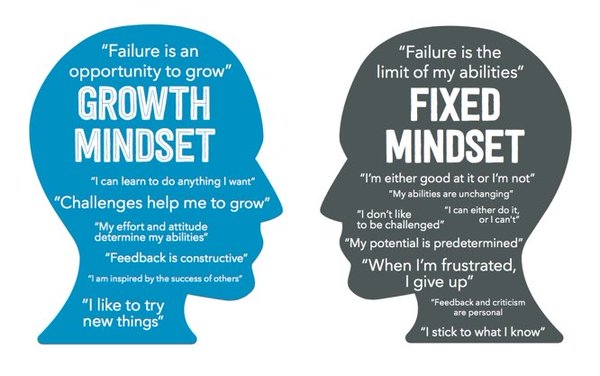

Intersecting personal experiences, I came to the conclusion that technology itself continues to evolve, creating performance and productivity opportunities for business and even reshaping the way we imagine social networks as they interact with the future. Tech and IT organizations undergo constant change due to hardware evolution, which pushes competition between industry sectors. What elements are needed in a digital supply network transformation methodology? • What does the digital supply chain look like (vision)? • What is the implementation roadmap, and what are the value drivers and dependencies? • What is the project plan and cost for piloting a digital thread (ROI opportunities)? • How long will it take to scale up and reconfigure the existing supply chain processes, and which prioritization mitigates risks? That's why I understand the importance of skill upgrades inside tech associations and organizations [professional development through courses or training]. Skill upgrades should not be ignored by any organization that really wants to make a difference in its market. That is also the main reason why I have pursued knowledge in different educational systems. In a world of constant transformation, it is important to stay up to date and try different approaches. You reach a point where you realize that solutions which disable our ability to grow and innovate will not survive. Even so, I witnessed a kind of resistance to CPD (Continuing Professional Development, or continuing education): some companies feel threatened or resist it, and have a hard time understanding how corrosive this attitude can be for every coworker's productivity and creativity. It only holds back growth and creates misunderstandings or internal conflicts that could easily be solved with education and shared perspectives.
Training can also become a means of altering behavior, not in a punitive way but so that gaps in organizational performance can be closed. Redefining value is not just about profit maximization but about sustainable growth as the measure of value (value creation vs. revenue extraction). The concept of training has many more aspects than just learning a skill: productivity; quality; empowerment of intellectual property; alignment and teamwork; liability; risk reduction; professional development that supports employees in gaining a wider perspective in their jobs and in their personal lives; evaluating what level of performance will be required to help the organization achieve its goals; establishing a strategy to meet current and future needs; determining where gaps currently exist between existing and required performance; preparing for change through knowledge sharing; and business conduct and social responsibility. Reduced cost comes with improved quality, for example by reducing the amount of rework and returns. Similarly, we reduce cost when we raise productivity or decrease time lost due to accidents.

Just as the best stories have interesting characters put into a difficult situation, I learned from past projects that the path to ensuring growth and creating value in a business ecosystem is a process that integrates consistent elements, such as resilience, security and compliance, interoperability and flexible scaling, combined with the right tools for the team to grow from a human and professional point of view. These are important tools that allow development teams to deliver software or other projects at an ever-increasing pace without compromising quality, because it can be easy to lose oneself in an overwhelming routine. Cultivate a knowledge-sharing culture: properly documenting failures makes it easier for other people (present and future) to know what happened and avoid repeating history. Instead of erasing your tracks, light them up! Informed individuals are less likely to panic, as they understand what's going on and how to respond appropriately. They're more likely to prepare for and prevent disasters when they understand the real risks they might face, which helps you improve your security wisely, maximize its impact, and define the metrics you'll need to make decisions, set goals and track progress. Software designers, developers and architects are constantly confronted with the same confounding problem: how to design software that is both flexible and resilient amid change. The more connected and proactive employees are in sharing knowledge, the higher the quality of their exchanges and the more productive and healthy the workplace. Why is it so important to be organized and have a balanced, liability-aware business plan? Those who own "brick-and-mortar" operations must understand liability and how it affects them. Unfortunately, accidents happen all the time. Understanding liability, conducting trainings, getting proper coverage and taking other steps can act as a shield against financial claims as you work to make your business safe and prevent accidents.
If a client is unhappy with deliverables, timelines or even the outcome of the project, they could file a lawsuit based on your management service agreement or contract. Upgrading and migrating knowledge ecosystems will deliver reliable, legal and faster performance for all staff. It's also important to put yourself in the client's shoes: consistency determines whether they decide to stay with the contract or walk away towards other services.

"If you're facing error, call it version 1.0 and keep trying! "

When I look back on this event, even though I wasn't fully prepared to understand some concepts, they made sense later. This is the process of knowledge: realizing that even if something does not make sense at the time, it will eventually connect in the future. There are alternative ways to connect: you don't have to follow traditional advice or go to events to successfully build and maintain a valuable network. Most events are mixing bowls for professionals who are there for different reasons. While attending, I realized how millennial professionals dislike the idea of meetings but, contradictorily, waste large amounts of time on more expensive events without a good return on their investment of time and money. An activity should be meant to increase the value of your network and/or the value you contribute to it. Proper networking is about building new relationships and deepening your existing ones.

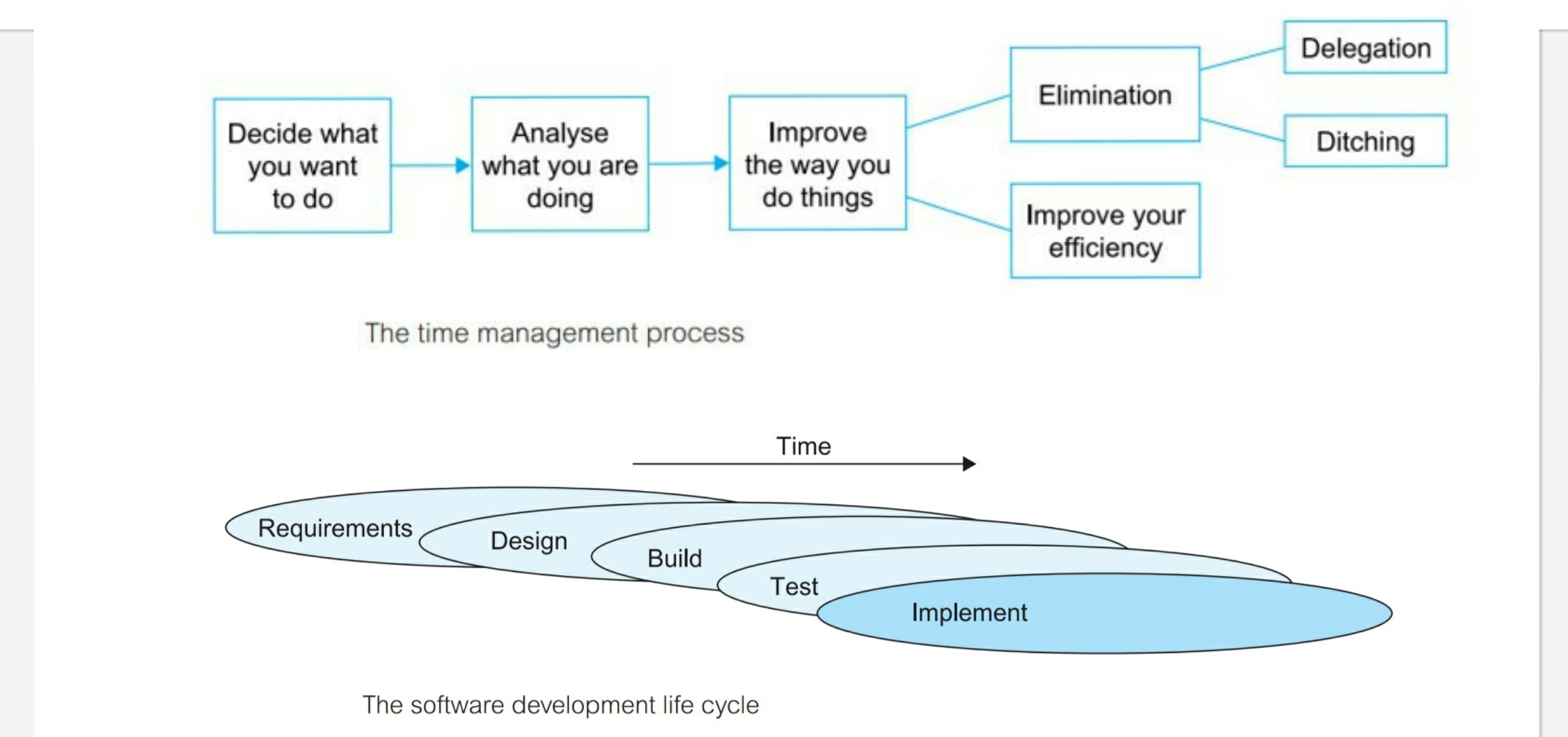

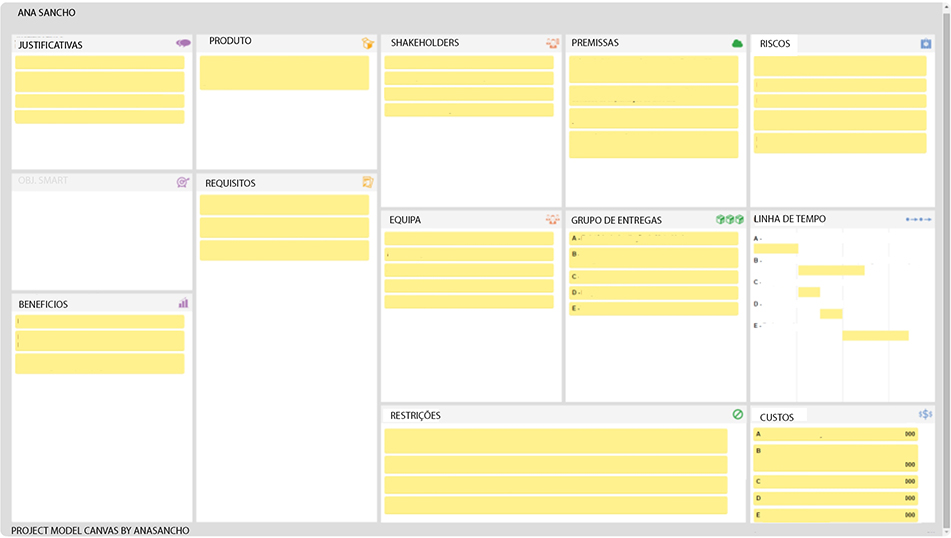

Communication in teams is equivalent to the neural network of the human body. Technologies that support collective interaction include online discussion boards and mailing lists. So even after the Google event, I opened an online channel on our Computer Science Slack called "eventos_tech", a virtual space where I shared everything I had learned with the group, to encourage my colleagues to recognize the importance of exchanging knowledge and being there to help each other. Shared workshops and tech events motivate people towards other, bigger challenges. It was also important to understand how the lack of an adequate project scope to contextualize a project can cause it to become dispersed or misperceived by team members and even future clients; underestimating the time and effort required to deliver a task can turn a challenging project into a hellish one. Without clarity and vision, we're unfocused, going nowhere fast. Another thing to consider is that the way people learn and digest information is very different from what it was in the past: every person has their own way of learning, their own learning process, with a time to absorb information and a time to learn how to decode, interconnect and re-create ideas. Respecting this is understanding oneself, being aligned with your consciousness. Change is about allowing the transformation of ideas. Reinforcing knowledge comes with consistent learning: go back to the start, relearn and try it out, apply data, share experiences, and teach others what you have learned, in a clearer way. Learning without the proper hierarchical structure needed to master a subject thoroughly can leave you stuck quite frequently. In short, you will not have the know-how, the comprehensive knowledge, that you need to use that language as a tool.
Workshops and interactive educational programs help people understand themselves, stay in contact with the market, and share and grasp the right knowledge: it's these types of events that lead to opportunity. My advice is to get involved in new activities; lead with curiosity; talk to new people; explore new subjects you've wanted to know more about; and think about small experiments you can run in order to learn and create opportunities to thrive both personally and professionally. In the end, it is up to you to make the world a better place and to rediscover views through an intersectional lens. It takes a proactive plan to bring your views to light. Surround yourself with people who value the perspectives and insights you have to offer as a multicultural professional, who truly see your potential, and whose opinion you value, to form a successful team. All too often, we ignore or actively change who we are in order to feel accepted into a group, a team or an organization, and we lose ourselves in the process. The best way to move from a state of powerlessness to empowerment is to have a clear understanding of what you want personally and professionally, how it impacts your life, and what you need to do to attain it. Plans don't have to be elaborate or complicated. "What is one thing you want to change in your life? What do you need to do to get there?" Once you are clear on what you want to transform, you find the psychological ease that comes with feeling aligned with your goals and ideals. Personal growth and development is a key to making a difference in life: you can make conscious decisions to either continue along or use these currents to move in a different direction. Without putting theory into practice, time is lost, resources are wasted and lessons are not learned. It's harder to retain knowledge without applying it regularly.
Developers need "soft skills" such as the ability to learn new technologies, communicate clearly with management and consulting clients, negotiate a fair hourly rate, and unite teammates and coworkers, nurturing gratitude, in working toward a common goal. Over the years, I learned not to fear my own opinion, even when others do not understand or disagree with my decisions. I don't fear failure; I would rather start from the beginning or uncover "key" strategies, because I believe in the capacity to overcome obstacles. There's nothing wrong with striving for a better version of oneself. Acknowledge your strengths and weaknesses, and apply the lessons from each experiment to future efforts. Errors can teach us about aspects of a problem we might not have seen before and prepare us to identify risks: identifying and understanding where a project fails strengthens the impact that effective risk management and compliance (a key to innovation) have on project success. This doesn't mean I keep repeating the same failures over and over; instead, I commit myself to encountering new challenges, so I can keep learning throughout the process of measuring and tracking the sequences of keystrokes that reconfigure awareness networks.

------> Google Developer Groups (GDGs) are for developers who are interested in Google's developer technology; everything from the Android, Chrome, Drive, and Google Cloud platforms, to product APIs like the Cast API, Maps API, and YouTube API.

💻🔒 CYBERSECURITY AND SUSTAINABILITY 📚 - THE DYNAMIC DUO -

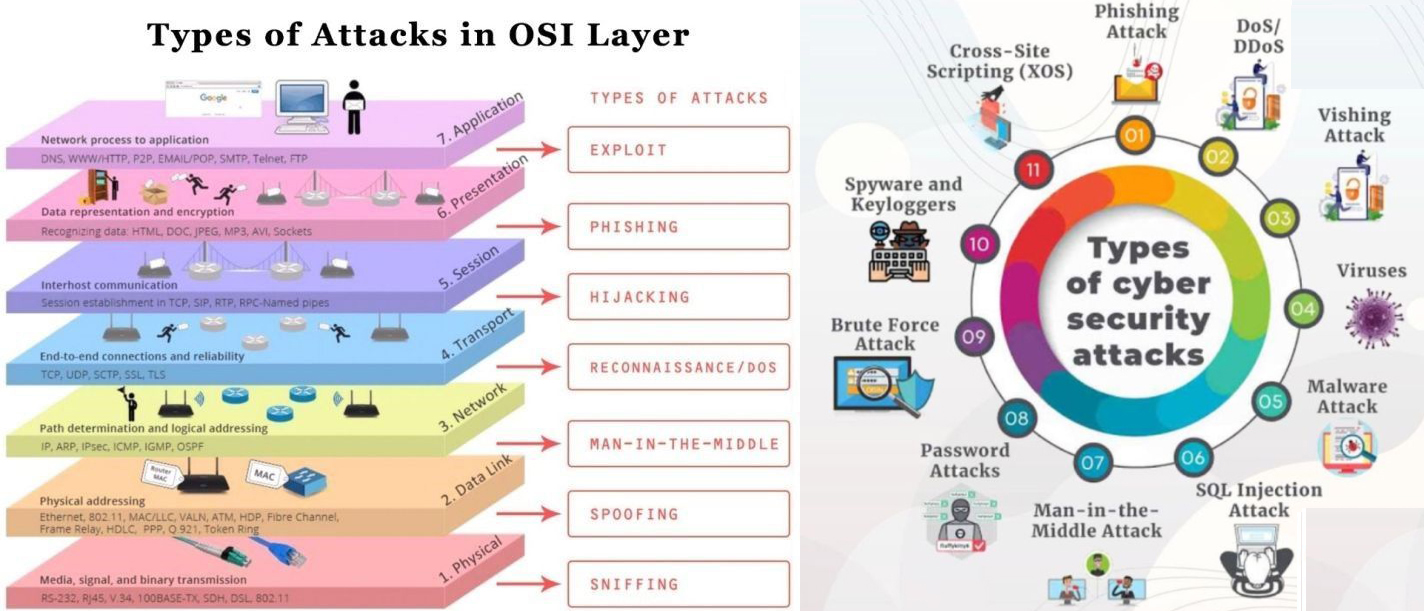

📅 TYPES OF ATTACKS - THREATS LAUNCHED AGAINST CLOUD SERVICES - | Lx Feb, 2023

Securing cloud environments is complex; a single misconfiguration can lead to a major data breach. By some industry estimates, misconfigurations cause around 80% of data breaches, and human error could account for up to 99% of cloud security failures by 2025. These risks apply to all cloud service types (SaaS, IaaS, PaaS), so organizations must implement strong security measures. Cybersecurity, sustainability, and AI combine to create a potent synergy: robust cybersecurity safeguards critical infrastructure and ensures data privacy, while AI supports both threat detection and sustainable development. This synergy aims for a tech-driven future with a reduced environmental impact and secure digital systems. How are cybersecurity and sustainability interconnected, and how can artificial intelligence (AI) contribute to this dynamic duo?
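Because misconfigurations are singled out as a leading cause of breaches, here is a minimal sketch of an automated configuration check. It assumes a hypothetical inventory format (plain dictionaries with `type`, `public_read`, `encrypted`, `source`, and `port` fields); real cloud providers expose this information through their own APIs and field names, so treat it as an illustration of the idea rather than a working audit tool.

```python
"""Minimal misconfiguration checker over a hypothetical cloud resource inventory."""
from dataclasses import dataclass

# Ports that are risky to expose to the whole internet (SSH and RDP).
SENSITIVE_PORTS = {22, 3389}


@dataclass
class Finding:
    resource: str
    issue: str


def check_resources(resources: list[dict]) -> list[Finding]:
    """Return one Finding per misconfiguration detected in the inventory.

    The dictionary schema is a hypothetical stand-in for provider APIs.
    """
    findings: list[Finding] = []
    for res in resources:
        name = res.get("name", "<unnamed>")
        kind = res.get("type")
        if kind == "storage_bucket":
            if res.get("public_read", False):
                findings.append(Finding(name, "bucket is publicly readable"))
            if not res.get("encrypted", False):
                findings.append(Finding(name, "encryption at rest is disabled"))
        elif kind == "firewall_rule":
            open_to_world = res.get("source") == "0.0.0.0/0"
            if open_to_world and res.get("port") in SENSITIVE_PORTS:
                findings.append(Finding(name, f"port {res['port']} open to the internet"))
    return findings


if __name__ == "__main__":
    inventory = [
        {"name": "backups", "type": "storage_bucket", "public_read": True, "encrypted": True},
        {"name": "allow-ssh", "type": "firewall_rule", "source": "0.0.0.0/0", "port": 22},
        {"name": "allow-https", "type": "firewall_rule", "source": "0.0.0.0/0", "port": 443},
    ]
    for f in check_resources(inventory):
        print(f"{f.resource}: {f.issue}")
```

Running it on the sample inventory reports the public bucket and the SSH rule, while the HTTPS rule passes because port 443 is meant to be public.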

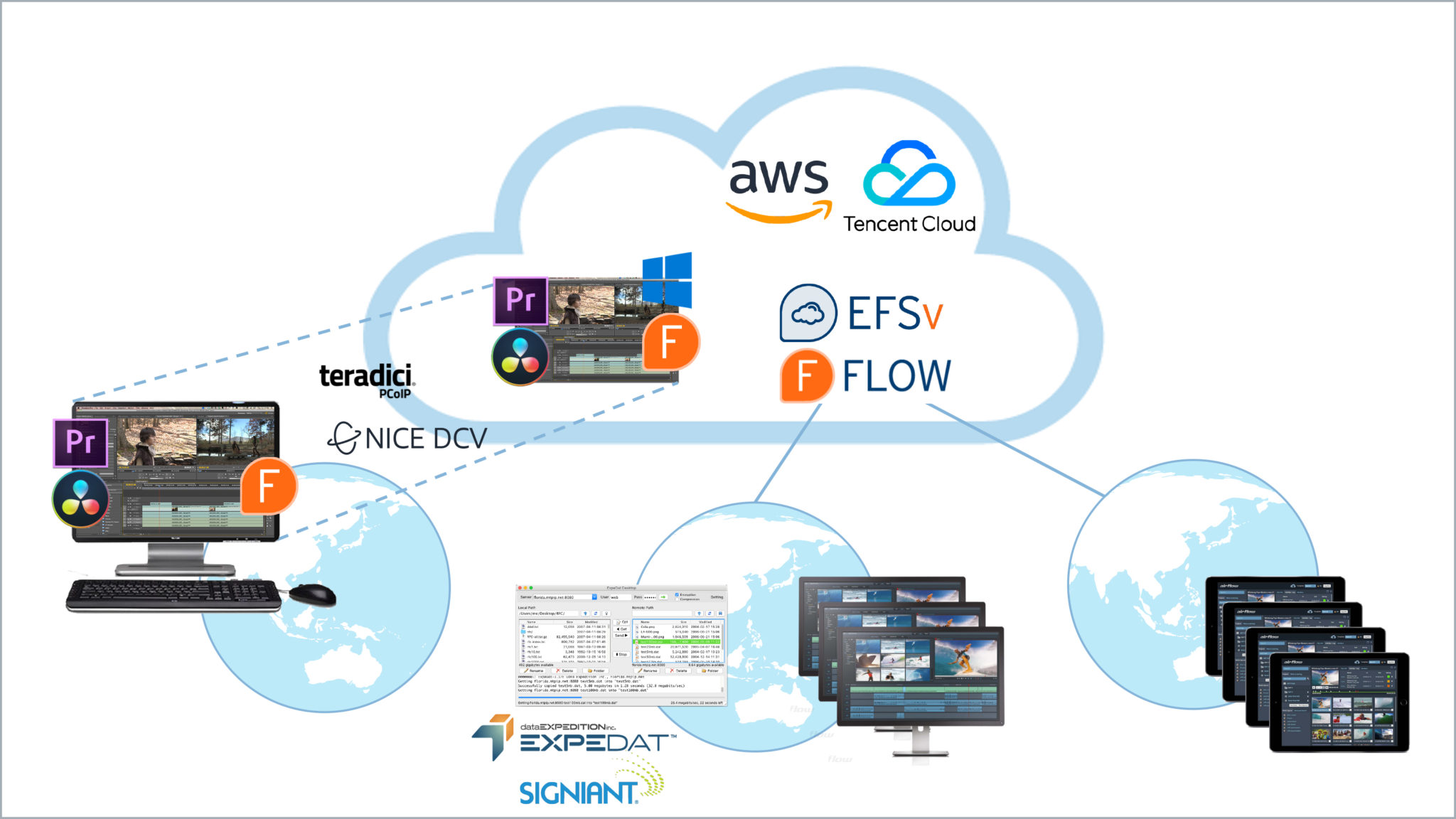

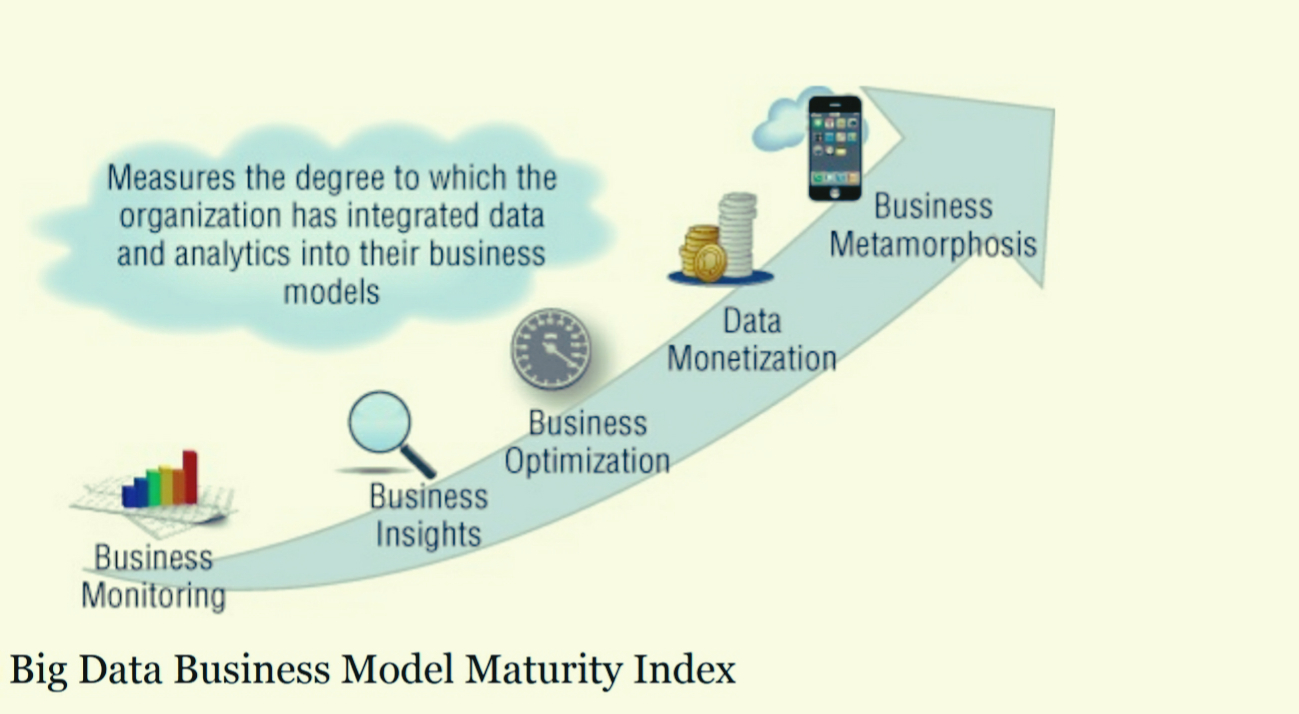

1. Protecting Sustainable Infrastructure: Cybersecurity is vital for safeguarding critical infrastructure such as smart grids and transportation networks, ensuring uninterrupted essential services and minimizing environmental harm.
2. Data Privacy and Green Computing: AI can reinforce data privacy alongside advanced encryption, and it optimizes energy use through intelligent resource allocation, supporting eco-friendly computing.
3. Sustainable AI Development: AI optimizes energy consumption in sectors such as transportation, but it must remain secure so that it does not introduce new vulnerabilities. Robust cybersecurity across the AI lifecycle is critical.
4. AI-Enabled Threat Detection: AI and machine learning analyze real-time data to identify cyber threats efficiently, strengthening cybersecurity against evolving risks.
5. Ethical Considerations: As AI advances, ethics gain importance. Fair, transparent, and accountable AI systems are crucial, especially in cybersecurity. Bias-free algorithms with explainable, auditable decision-making promote responsible, sustainable cybersecurity.

IaaS (Infrastructure as a Service) offers virtualized computing resources on a pay-per-use basis, saving hardware costs and enabling scalable application deployment. AIaaS (Artificial Intelligence as a Service) builds on IaaS, making AI integration accessible without in-house AI expertise. Major cloud providers such as AWS, Google Cloud, Azure, and IBM provide the IaaS foundation, supplying the computing resources that AI workloads need. By using these platforms, organizations gain access to high-performance computing, scalable storage, and networking for AI. Cloud providers also offer AI-specific services, such as pre-trained models and APIs, that make AI integration easier. AIaaS democratizes AI by making advanced capabilities accessible and usable by a broader range of users, regardless of their technical expertise. Enterprises should consider AIaaS when they want to integrate AI without specialized AI staff.
For providers, AIaaS monetization means generating revenue by offering AI capabilities as a service. For customers, AIaaS offers accessibility, affordability, scalability, and faster time-to-market, enabling organizations to leverage AI effectively without extensive investment or technical know-how.

Enterprises should consider adopting AIaaS under the following circumstances:

1. Limited AI expertise: If an enterprise lacks in-house AI expertise or resources, AIaaS lets it leverage AI capabilities without extensive hiring or training, so it can benefit from AI technologies quickly and efficiently.
2. Cost considerations: AI development and deployment can be costly, requiring investment in hardware, software, and skilled personnel. AIaaS offers a cost-effective alternative by shifting these expenses to a pay-as-you-go model, reducing upfront capital expenditure.
3. Scalability requirements: If an enterprise needs to scale AI initiatives rapidly or handle fluctuating workloads, AIaaS platforms provide the necessary infrastructure and flexibility, letting it align its AI capabilities with business needs efficiently.
4. Maintenance and experimental projects: AIaaS providers can take on ongoing maintenance of AI models and custom development work for specific customer needs, charging separately for these services.

AIaaS providers employ various monetization strategies, including different pricing models and customized packages.
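The cost consideration in point 2 can be made concrete with a rough break-even calculation. This sketch compares a hypothetical in-house setup (one up-front investment plus a fixed monthly operating cost) with hypothetical pay-as-you-go pricing per 1,000 requests; all prices and volumes are illustrative assumptions, not real provider rates.

```python
"""Rough break-even comparison: in-house AI vs. pay-as-you-go AIaaS (illustrative figures only)."""
from typing import Optional


def in_house_cost(months: int, upfront: float, monthly_ops: float) -> float:
    """Cumulative cost: up-front capital expenditure plus recurring operating cost."""
    return upfront + monthly_ops * months


def aiaas_cost(months: int, requests_per_month: int, price_per_1k: float) -> float:
    """Cumulative pay-as-you-go cost: billed only for the requests actually made."""
    return months * requests_per_month / 1000 * price_per_1k


def break_even_month(upfront: float, monthly_ops: float, requests_per_month: int,
                     price_per_1k: float, horizon: int = 120) -> Optional[int]:
    """First month in which the in-house option becomes cheaper, or None within the horizon."""
    for month in range(1, horizon + 1):
        if in_house_cost(month, upfront, monthly_ops) < aiaas_cost(month, requests_per_month, price_per_1k):
            return month
    return None


if __name__ == "__main__":
    print(break_even_month(50_000, 2_000, 1_000_000, 5.0))
    print(break_even_month(50_000, 2_000, 100_000, 5.0))
```

With 50,000 up front, 2,000 per month to operate, and 1,000,000 requests per month at 5.0 per 1,000, the first call returns 17: from that month on, the in-house option is cumulatively cheaper. At 100,000 requests per month the second call returns `None`, because the pay-as-you-go bill (500 per month) never catches up with the in-house running cost.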

Additionally, some providers offer MLOps (Machine Learning Operations), the set of best practices for managing machine learning models in production, as a separate service. MLOps costs can be included in the subscription fee or charged separately, depending on the support and resources required. Keep in mind that pricing strategies vary among providers and industries. In short, IaaS provides virtualized computing resources, while AIaaS builds on this infrastructure for easy AI integration; leading cloud providers such as AWS, Google Cloud, Azure, and IBM Cloud offer IaaS platforms that support AI integration for a variety of use cases. Other cloud service models include:

1. PaaS (Platform as a Service): a complete application development and deployment platform that frees developers from infrastructure concerns.
2. SaaS (Software as a Service): subscription-based software delivered over the internet, eliminating local installation and maintenance.
3. FaaS (Function as a Service): an on-demand code execution platform that abstracts away infrastructure and scaling.
4. DBaaS (Database as a Service): cloud-based database management for scalable, secure data storage.
5. BaaS (Backup as a Service): cloud-based data backup and recovery, eliminating on-premises backup infrastructure.
6. DRaaS (Disaster Recovery as a Service): cloud-based disaster recovery that replicates critical systems and data for easy recovery during disasters.

Organizations can select the service type that best suits their needs.
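To illustrate the FaaS model, here is a sketch of a single function written against AWS Lambda's Python handler convention, `lambda_handler(event, context)`. It assumes an API Gateway proxy-style event whose `body` field holds a JSON string; the greeting logic is purely illustrative. The developer only writes the function, while the platform provisions servers and scales the number of running instances.

```python
"""A minimal FaaS-style function following AWS Lambda's Python handler convention."""
import json


def lambda_handler(event, context):
    """Entry point; the FaaS platform invokes it once per incoming event."""
    # Assumption: API Gateway proxy-style event, request body as a JSON string.
    try:
        payload = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return {"statusCode": 400, "body": json.dumps({"error": "invalid JSON"})}
    if not isinstance(payload, dict):
        return {"statusCode": 400, "body": json.dumps({"error": "expected a JSON object"})}
    name = payload.get("name", "world")
    return {"statusCode": 200, "body": json.dumps({"message": f"Hello, {name}!"})}


if __name__ == "__main__":
    # Local invocation: in the cloud, the platform supplies event and context.
    print(lambda_handler({"body": '{"name": "GDG"}'}, None))
```

Validating the input inside the function matters because every FaaS endpoint exposed through an API is part of the attack surface discussed below.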

There are several types of attacks that can be launched against cloud services, including:

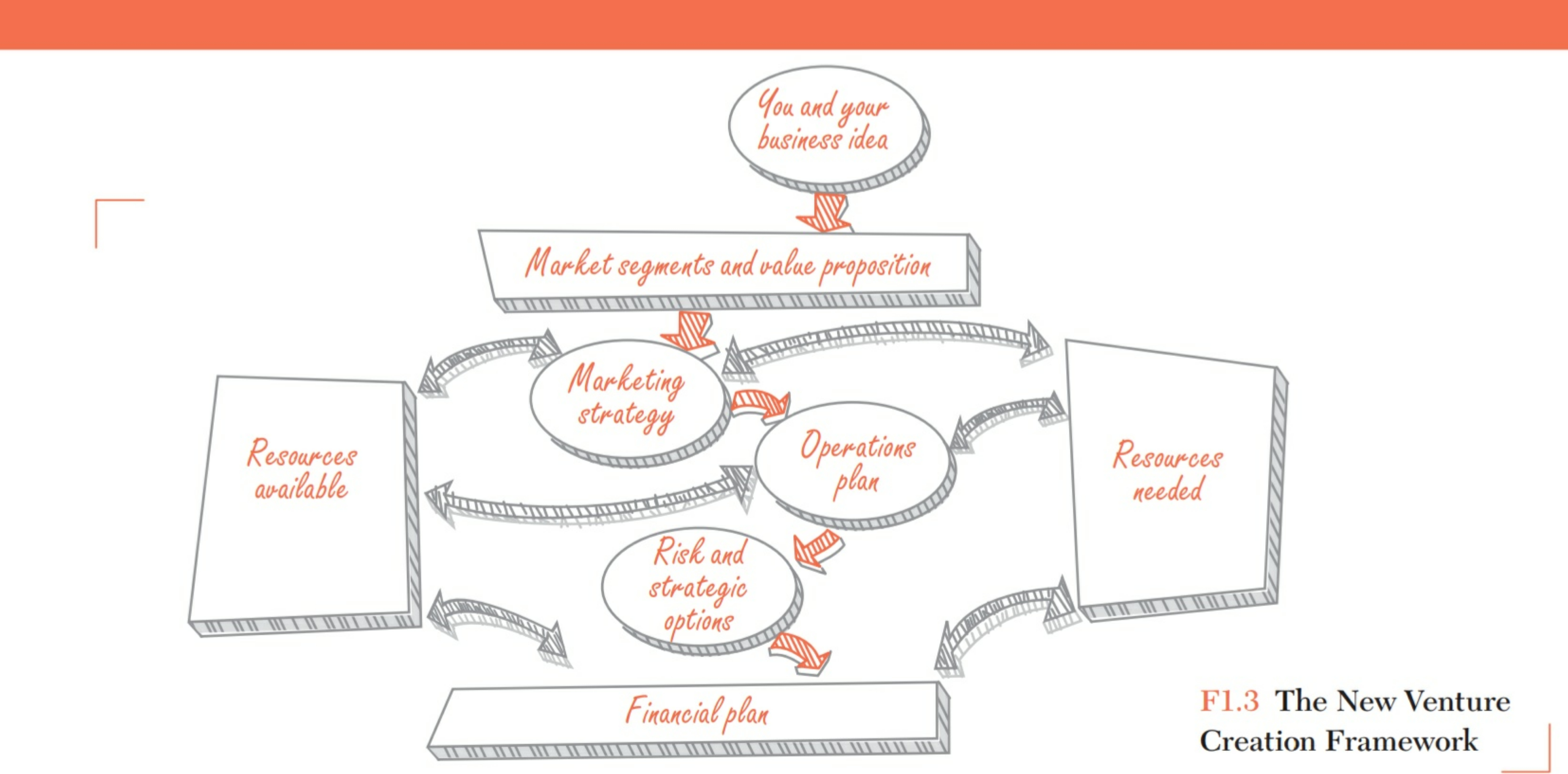

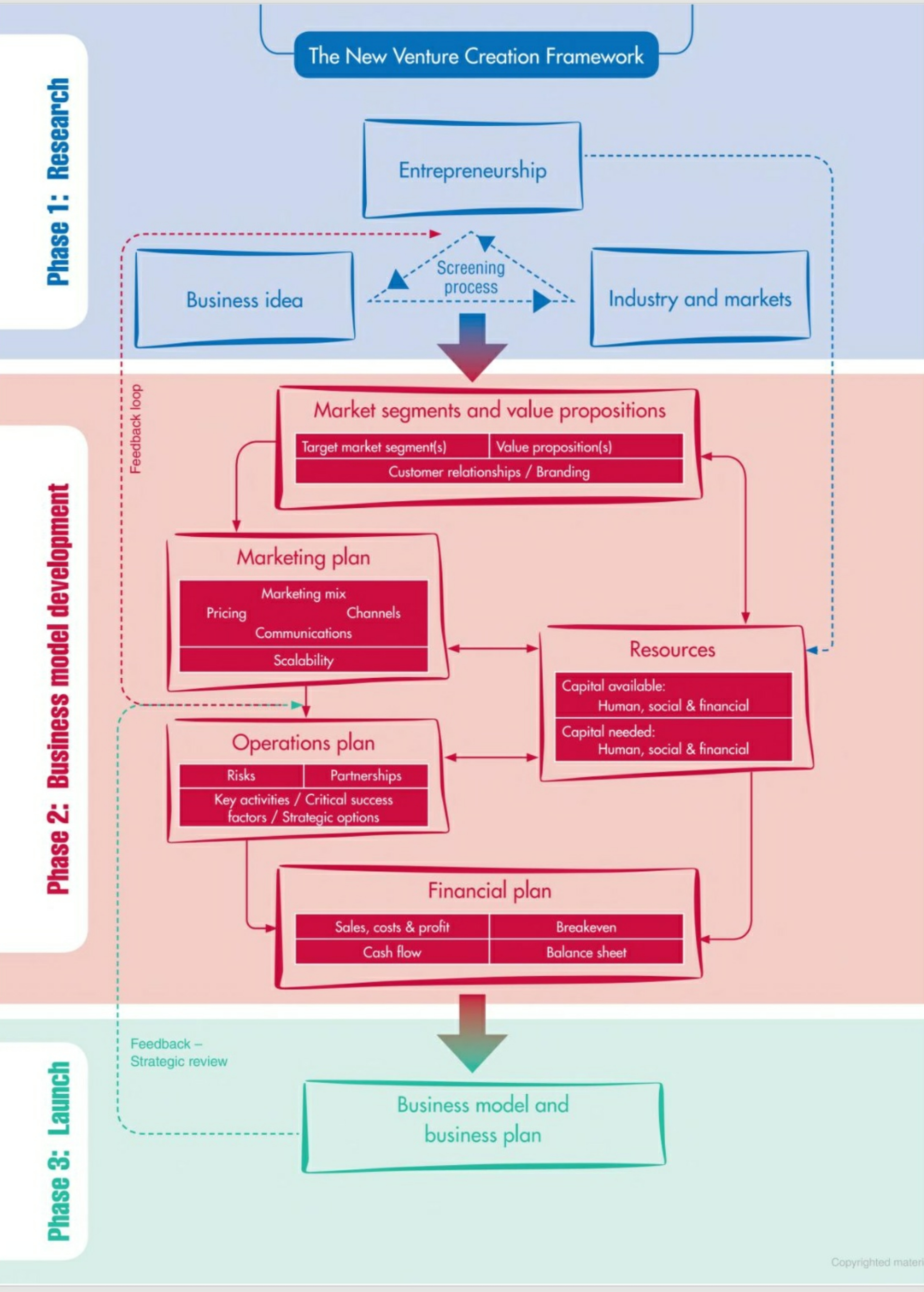

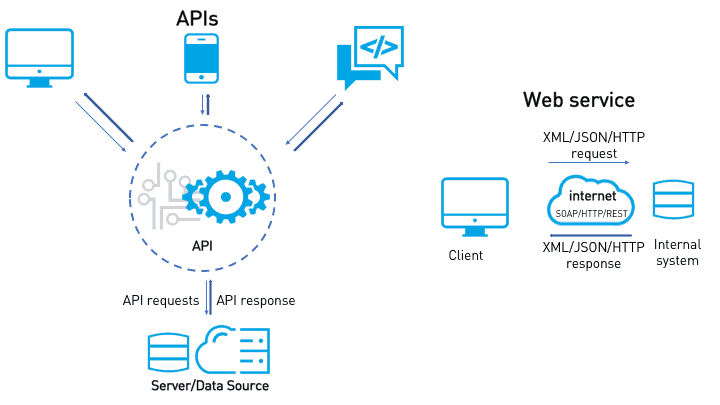

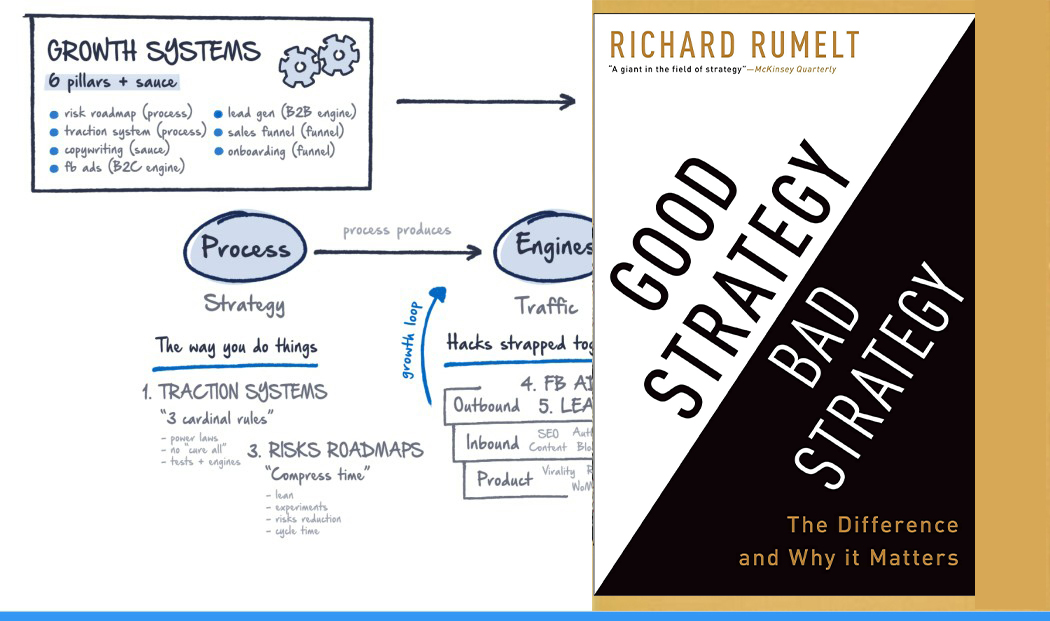

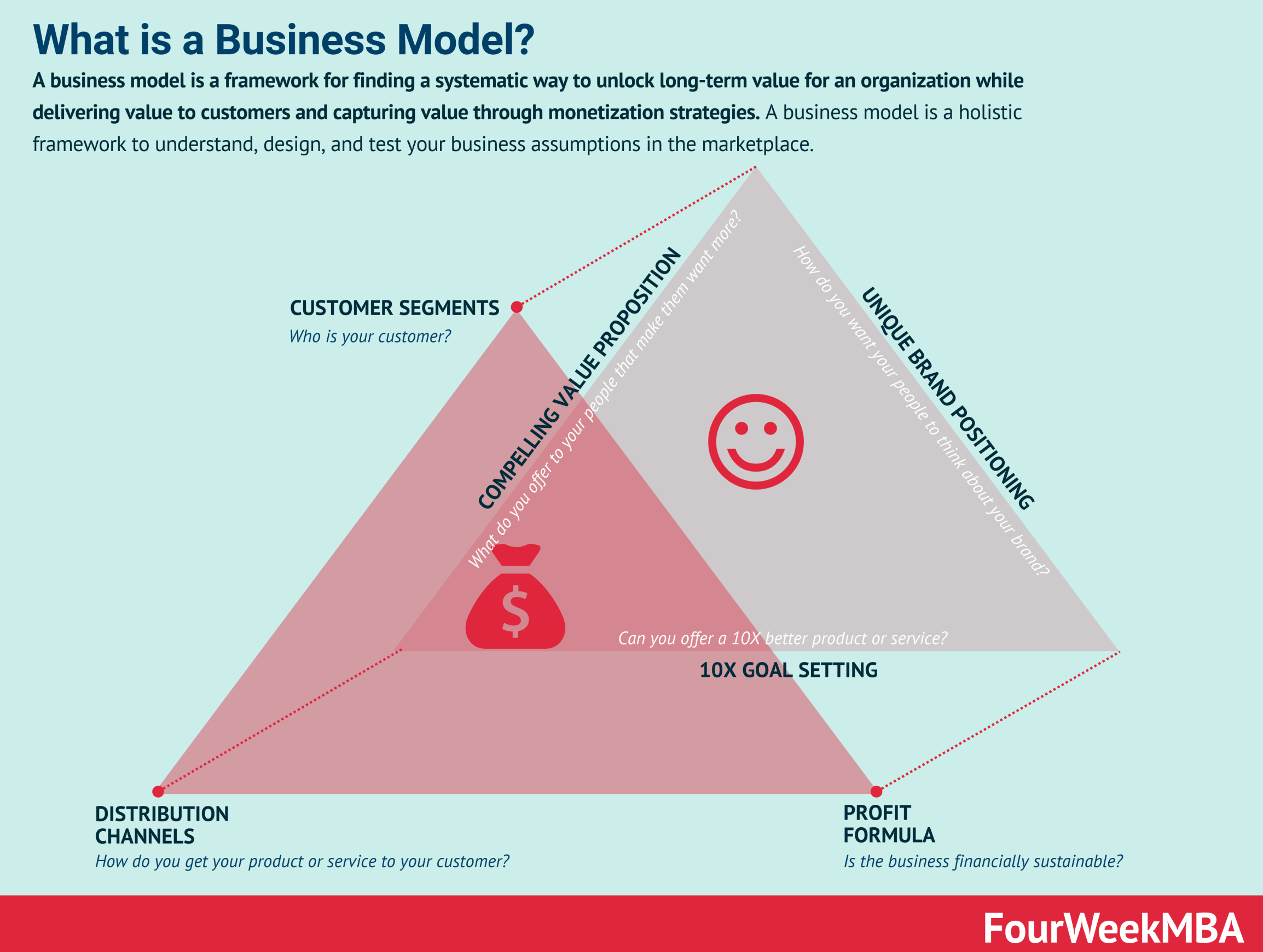

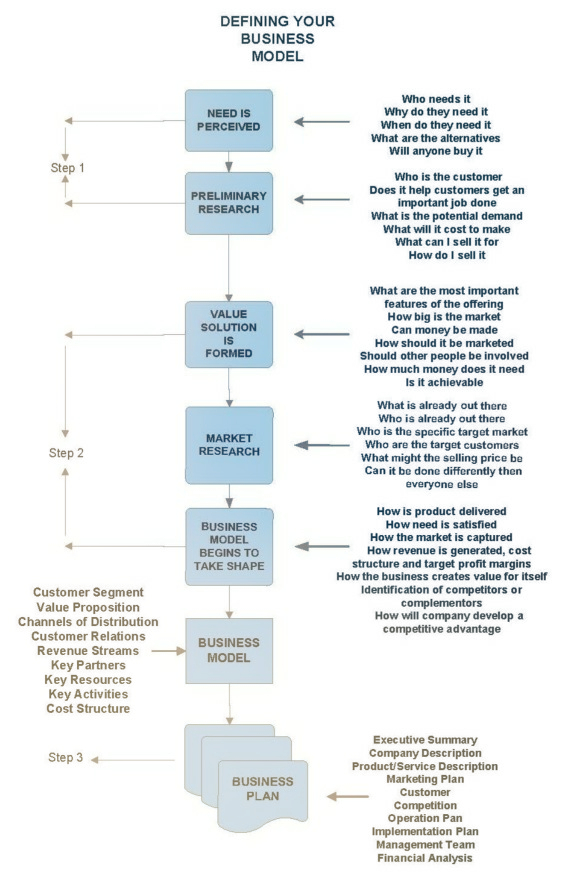

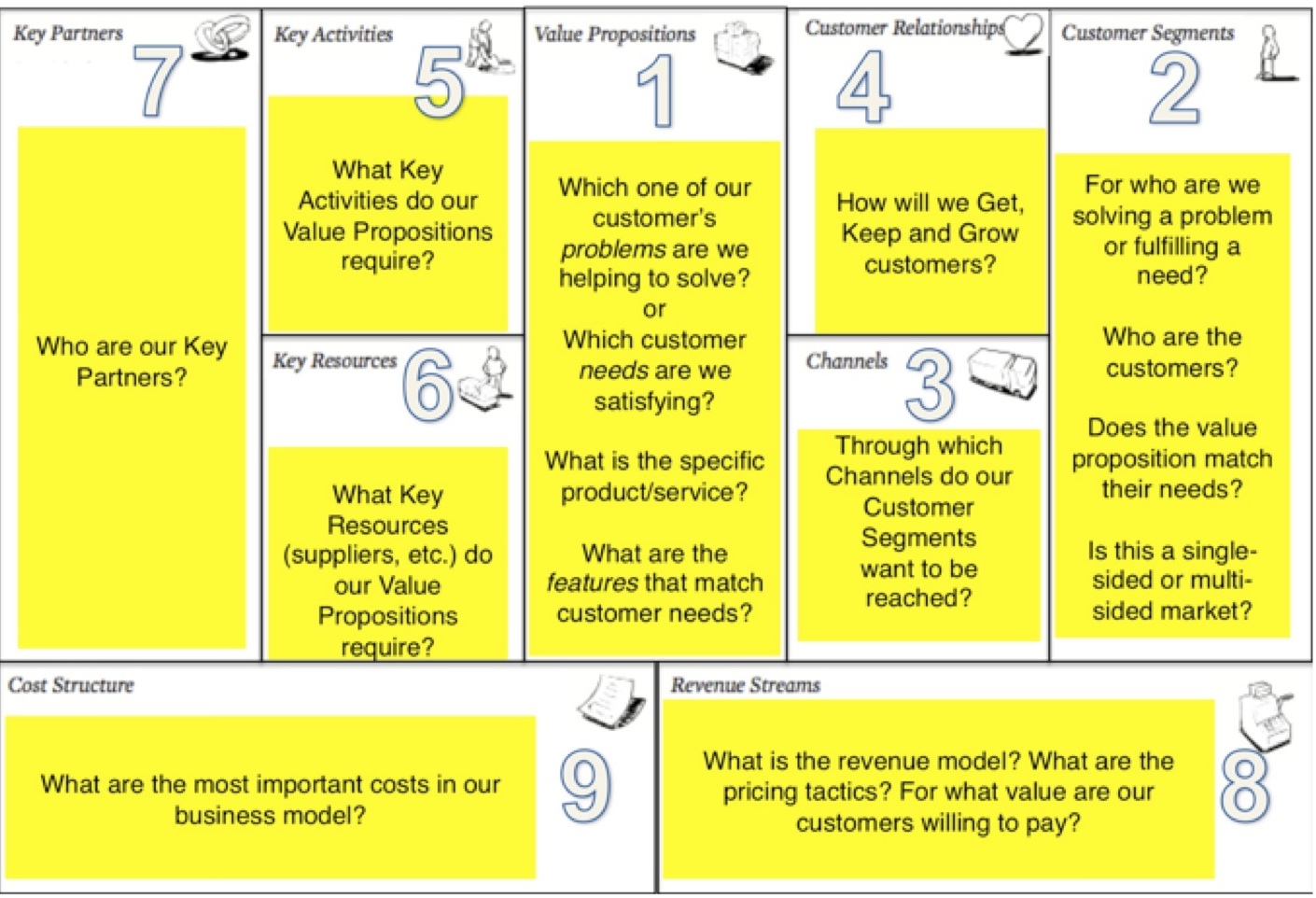

1. DDoS Attacks: Distributed Denial of Service (DDoS) attacks are a common type of attack on cloud services. The attacker tries to overwhelm a cloud service with traffic from multiple sources, making it unavailable to legitimate users.
2. Data Breaches: Cloud services are often used to store sensitive data such as personal information, financial data, and intellectual property. Data breaches occur when unauthorized users gain access to this data, either by exploiting vulnerabilities in the cloud service or by stealing login credentials.
3. Man-in-the-Middle Attacks: In a man-in-the-middle (MitM) attack, the attacker intercepts, and can alter, communication between two parties. This can allow the attacker to steal sensitive data, inject malware into the communication, or impersonate one of the parties.
4. Malware Infections: Malware can be introduced into cloud services through several means, including infected software updates, malicious email attachments, and phishing attacks. Once installed, the malware can be used to steal data, control the cloud service, or launch additional attacks.
5. Insider Threats: Insider threats occur when someone with authorized access to a cloud service misuses that access to steal data, cause damage, or launch attacks. This includes employees, contractors, and other authorized users.
6. Cryptomining Attacks: Cryptomining attacks use a cloud service's computing resources to mine cryptocurrency without the owner's knowledge or consent. These attacks can slow down the cloud service and increase its costs.
7. API Attacks: Cloud services often provide APIs (Application Programming Interfaces) that allow other software to interact with them. API attacks occur when an attacker exploits vulnerabilities in an API to gain unauthorized access to the cloud service or its data.
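For the DDoS item, one application-layer mitigation is per-client rate limiting. The sketch below uses the token-bucket algorithm: each client gets a bucket that refills at `rate` tokens per second up to `capacity`, and each request spends one token. The limits (5 requests per second, bursts of 10) and keying by client IP are illustrative assumptions. Rate limiting inside the application does not stop volumetric floods that saturate the network; those have to be absorbed upstream, for example by the cloud provider's DDoS protection.

```python
"""Token-bucket rate limiter: a per-client throttle that blunts application-layer floods."""
import time


class TokenBucket:
    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate                # tokens added per second
        self.capacity = capacity        # maximum burst size
        self.tokens = float(capacity)   # start full
        self.clock = clock              # injectable clock for testing
        self.last = clock()

    def allow(self) -> bool:
        """Spend one token if available; refuse the request otherwise."""
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# One bucket per client; the limits below are illustrative assumptions.
buckets: dict[str, TokenBucket] = {}


def handle_request(client_ip: str) -> int:
    """Return an HTTP status code: 200 if allowed, 429 (Too Many Requests) if throttled."""
    if client_ip not in buckets:
        buckets[client_ip] = TokenBucket(rate=5, capacity=10)
    return 200 if buckets[client_ip].allow() else 429
```

Injecting the `clock` function keeps the class testable: a test can advance a fake clock instead of sleeping.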
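Against man-in-the-middle attacks, the baseline defense is encrypting traffic with TLS and actually verifying the server's certificate and hostname. This sketch uses Python's standard `ssl` module; requiring TLS 1.2 as the minimum version is an assumption, and `fetch_peer_certificate_subject` is a hypothetical helper name.

```python
"""Client-side TLS with certificate and hostname verification, a baseline MitM defense."""
import socket
import ssl


def make_client_context() -> ssl.SSLContext:
    # create_default_context() loads the system's trusted CAs and enables
    # certificate validation (CERT_REQUIRED) plus hostname checking.
    ctx = ssl.create_default_context()
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse legacy protocol versions
    return ctx


def fetch_peer_certificate_subject(host: str, port: int = 443) -> tuple:
    """Connect over verified TLS and return the server certificate's subject."""
    ctx = make_client_context()
    with socket.create_connection((host, port), timeout=5) as sock:
        # server_hostname enables SNI and is the name the certificate is checked
        # against; a forged certificate raises ssl.SSLCertVerificationError here.
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["subject"]
```

Calling `fetch_peer_certificate_subject("example.com")` needs network access. If an interceptor presents a certificate that does not chain to a trusted CA or does not match the hostname, the handshake fails with `ssl.SSLCertVerificationError`. Setting `check_hostname = False` or `verify_mode = ssl.CERT_NONE` would silently remove this protection.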
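For malware delivered through tampered software updates, one simple safeguard is to compare a downloaded file's SHA-256 digest with the digest the vendor publishes before installing it. This is a minimal sketch with hypothetical function names; it only helps if the expected digest comes from a trusted channel, and cryptographically signed updates offer stronger guarantees.

```python
"""Verify a downloaded update against a published SHA-256 digest before installing it."""
import hashlib
import hmac


def sha256_of_file(path: str, chunk_size: int = 65536) -> str:
    """Hash the file in chunks so large installers do not need to fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def is_update_authentic(path: str, expected_hex: str) -> bool:
    """True only if the file's digest matches the published one (case-insensitive hex)."""
    # compare_digest compares in constant time, avoiding timing leaks.
    return hmac.compare_digest(sha256_of_file(path), expected_hex.lower())
```

An installer script would refuse to proceed whenever `is_update_authentic` returns `False`.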
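Insider threats are often spotted through unusual behavior, such as an account suddenly downloading far more data than usual. As a stand-in for the AI-driven threat detection mentioned earlier, this sketch uses a simple statistical baseline (a per-user z-score), not machine learning; the data layout and the threshold of 3 standard deviations are assumptions.

```python
"""Flag users whose daily data-access volume jumps far above their own baseline."""
from statistics import mean, stdev


def flag_unusual_access(history: dict[str, list[float]],
                        today: dict[str, float],
                        threshold: float = 3.0) -> list[str]:
    """Return users whose volume today exceeds their historical mean by more
    than `threshold` standard deviations (a simple z-score rule)."""
    flagged = []
    for user, volume in today.items():
        past = history.get(user, [])
        if len(past) < 2:
            continue  # not enough history to build a baseline
        mu, sigma = mean(past), stdev(past)
        if sigma == 0:
            if volume > mu:
                flagged.append(user)  # any increase over a perfectly flat baseline
            continue
        if (volume - mu) / sigma > threshold:
            flagged.append(user)
    return flagged


if __name__ == "__main__":
    history = {"alice": [10, 12, 11, 9, 10], "bob": [100, 120, 110, 90, 105]}
    print(flag_unusual_access(history, {"alice": 500, "bob": 115}))
```

On the sample data only `alice` is flagged: her 500 units sit far outside her usual 9 to 12, while `bob`'s 115 is within his normal range. Users without enough history are skipped rather than flagged.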
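API attacks often rely on forged or replayed requests. A common countermeasure is to require each client to sign its requests with a shared secret using HMAC-SHA256, and to reject requests with a bad signature or a stale timestamp. The message layout and the 300-second window below are assumptions for illustration; real providers define their own schemes (for example, AWS Signature Version 4), and a strict replay defense would also track nonces.

```python
"""HMAC-SHA256 request signing: reject API calls with a bad signature or stale timestamp."""
import hashlib
import hmac
import time
from typing import Optional

MAX_SKEW_SECONDS = 300  # assumed freshness window, limits replay of captured requests


def sign(secret: bytes, method: str, path: str, body: bytes, timestamp: int) -> str:
    """Sign a canonical message; this newline-joined layout is a hypothetical format."""
    message = b"\n".join([method.upper().encode(), path.encode(), str(timestamp).encode(), body])
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify(secret: bytes, method: str, path: str, body: bytes, timestamp: int,
           signature: str, now: Optional[float] = None) -> bool:
    """Server-side check: fresh timestamp and matching signature."""
    now = time.time() if now is None else now
    if abs(now - timestamp) > MAX_SKEW_SECONDS:
        return False
    expected = sign(secret, method, path, body, timestamp)
    return hmac.compare_digest(expected, signature)  # constant-time comparison
```

Because the body and path are part of the signed message, an attacker who tampers with either, or who does not hold the secret, cannot produce a valid signature.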
Cloud services face a wide range of security threats, including DDoS attacks, data breaches, man-in-the-middle attacks, malware infections, insider threats, cryptomining attacks, and API vulnerabilities, all of which endanger data integrity and system availability. Effective mitigation strategies include external network monitoring, penetration testing, and robust internal security protocols. For Software as a Service (SaaS) providers in particular, the multi-tenant nature of their platforms makes it imperative to guard against breaches that could expose customer data. Addressing common vulnerabilities such as misconfigurations and software flaws requires routine security assessments, and as SaaS companies expand their operations, prioritizing cybersecurity becomes indispensable.

Amid the challenges of digital transformation in the 21st century, there is a pronounced tendency to prioritize technology adoption over strategic planning, which often leaves technology poorly aligned with long-term business objectives. Moreover, an undue emphasis on data quantity over quality can lead to decisions based on incomplete or inaccurate information, exacerbating risks. Neglecting employee training and change management when automating processes risks workforce resistance and slows technology adoption. Additionally, the absence of adequate cybersecurity infrastructure is a significant concern, underscoring the need for manufacturers to allocate sufficient resources to address cybersecurity risks effectively. By employing automated vulnerability scanners and conducting regular penetration testing, organizations can strengthen their web applications, detecting and mitigating vulnerabilities throughout the development cycle and protecting them against potential threats.

THE BACKBONE GUIDELINE BUSINESS PLAN

PART I - Working with Teams | Accountability & Responsibilities.

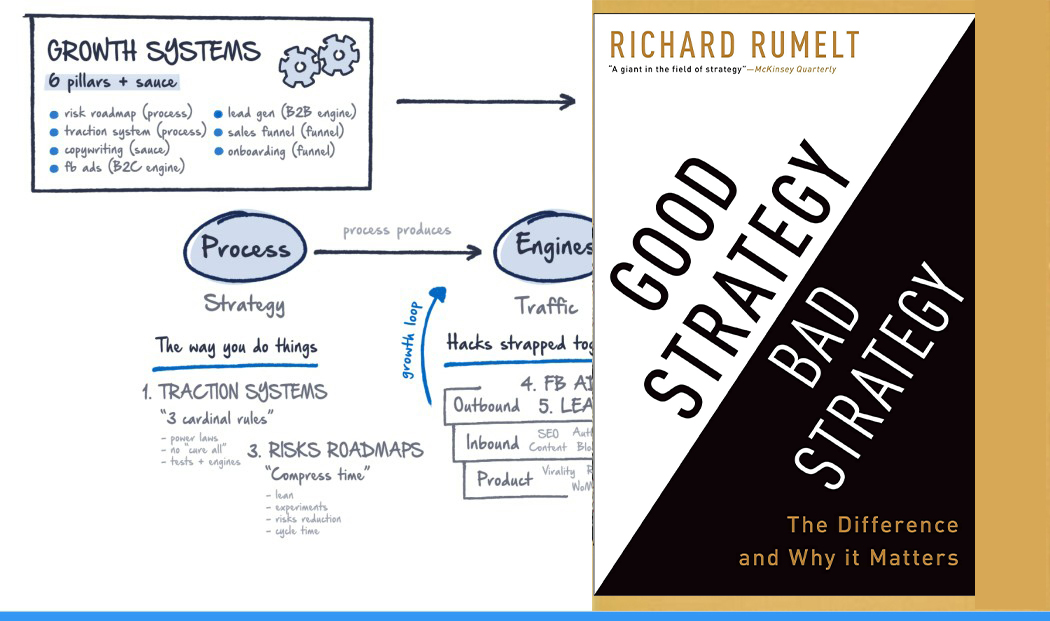

📅 BLENDS OF INSIGHTS - NEW GOALS REQUIRE NEW STRATEGIES - | Lx Feb, 2020